When importing data through a data connector you may sometimes find a column named something like col_1 when you expected a name of col. In this blog post we will cover why this happens, preventative measures to take before importing to ensure it doesn't happen, as well as how to fix the issue post import after it has happened.

First, lets dig into why this issue happens in the first place. The best way to understand what is happening is through a demonstration, so lets run a data connector import and walk through what is happening with the data.

In this example we will use the TD Toolbelt command line to import files using the Amazon S3 Import Connector.

We prepared the following yml file to import files on S3. Note that the first letter of the column name is capitalized in the column configuration.

load_from_s3_blog.yml

in:

type: s3

access_key_id: XXXXXXX

secret_access_key: XXXXXXXXX

bucket: kohkitest

path_prefix: test_folder/testfile.csv

parser:

charset: UTF-8

newline: LF

type: csv

delimiter: ","

quote: "\""

escape: "\""

trim_if_not_quoted: false

skip_header_lines: 1

allow_extra_columns: false

allow_optional_columns: false

columns:

- {name: Id, type: long}

- {name: Name , type: string}

- {name: Company_id, type: long}

out: {mode: append}

exec: {}

filters:

- from_value: {mode: upload_time}

to_column: {name: time}

type: add_time

The target file, testfile.csv, placed on S3, is shown below.

id,name,company_id

1,Taro,111111

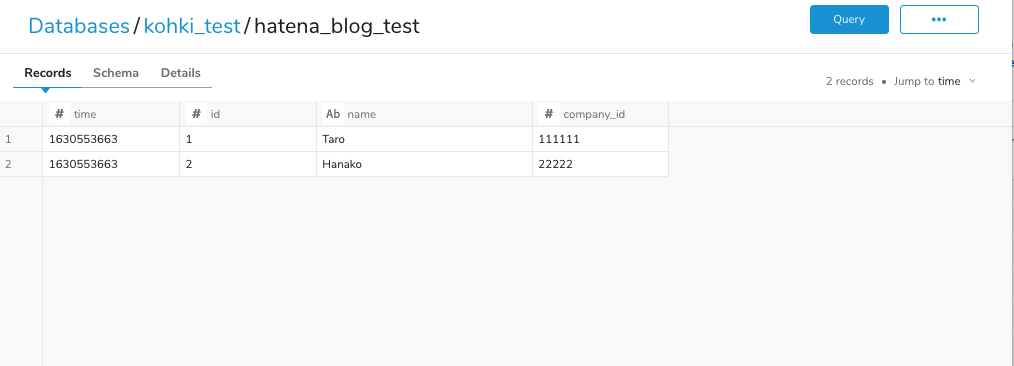

2,Hanako,22222The following table will be created after importing this .yml file.

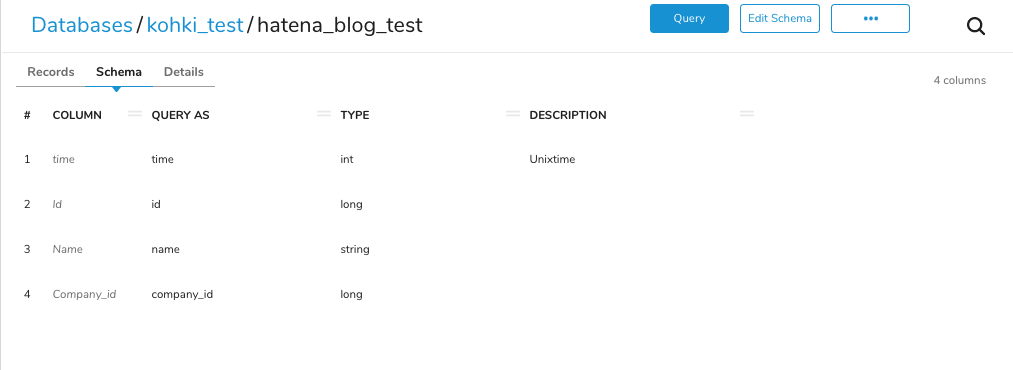

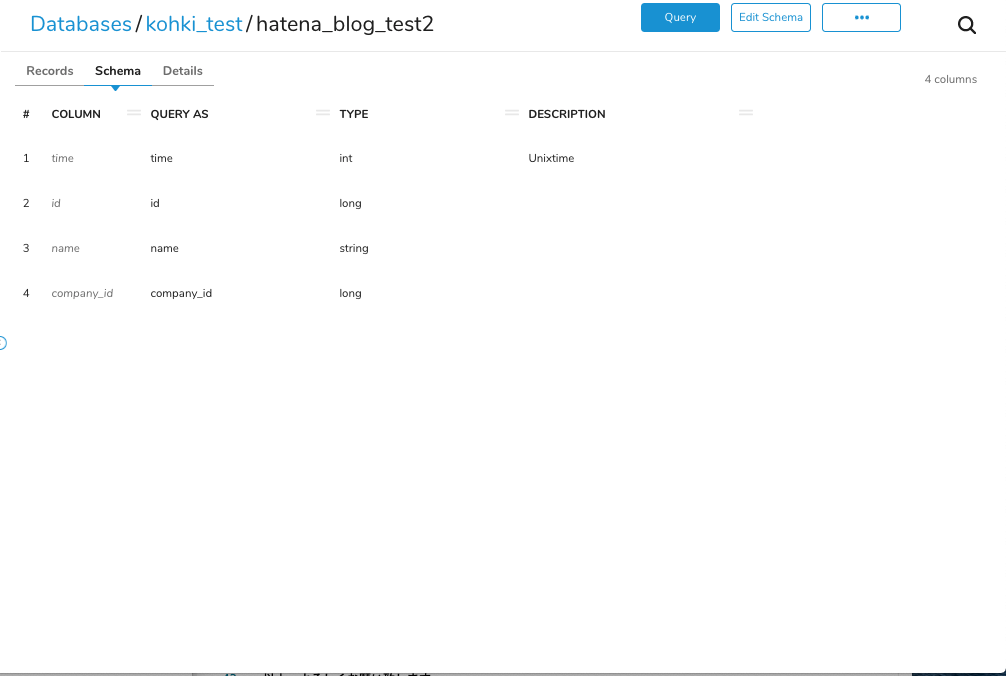

Looking at the Schema tab, it appears that the values for COLUMN and QUERY AS are different, with COLUMN set to the column name originally defined in the .yml file and QUERY AS set to the column name defined in all lowercase letters.

The reason is because Treasure Data allows only lower-case alphanumeric characters [a-z0-9_] and _ (underscore) in column names, so the actual column name defined in the .yml file is retained as COLUMN, and the original column name in the form of Alias called QUERY AS is retained as the column name on the table. It is the latter column name as Alias that is specified in SQL.

You can find more details about this in the Naming requirements and restrictions in Treasure Data documentation.

The important snippet is highlighted below:

only lower case letters, numbers, the _ (underscore)

Next, let's change the .yml file to align the column name definitions in columns to lowercase.

load_from_s3_blog.yml

in:

type: s3

access_key_id: XXXXXXX

secret_access_key: XXXXXXXXX

bucket: kohkitest

path_prefix: test_folder/testfile.csv

parser:

charset: UTF-8

newline: LF

type: csv

delimiter: ","

quote: "\""

escape: "\""

trim_if_not_quoted: false

skip_header_lines: 1

allow_extra_columns: false

allow_optional_columns: false

columns:

- {name: id, type: long}

- {name: name , type: string}

- {name: company_id, type: long}

out: {mode: append}

exec: {}

filters:

- from_value: {mode: upload_time}

to_column: {name: time}

type: add_time

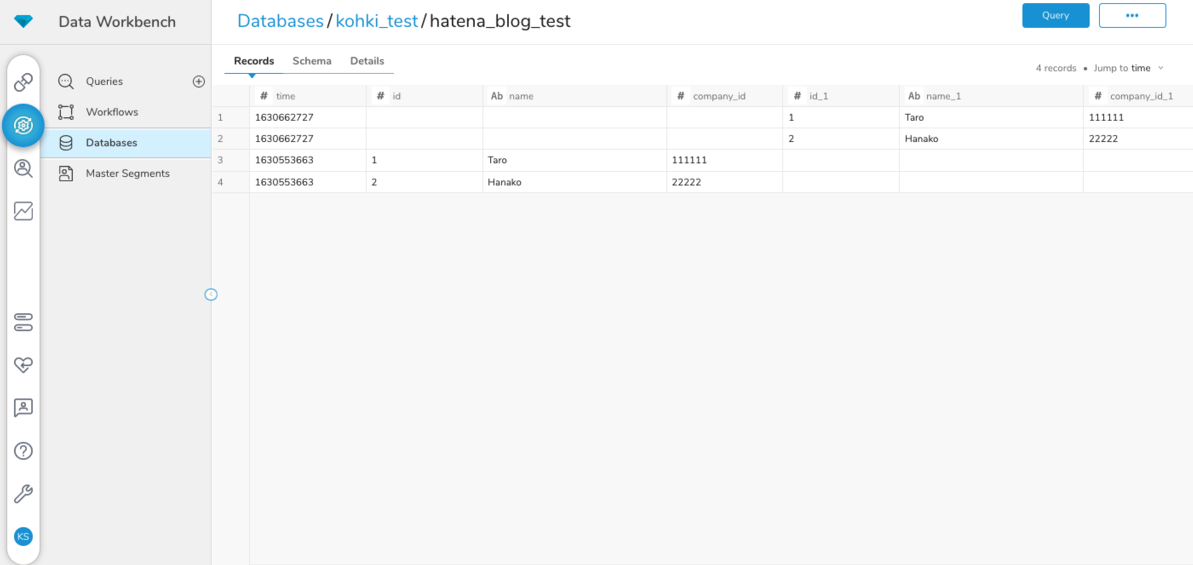

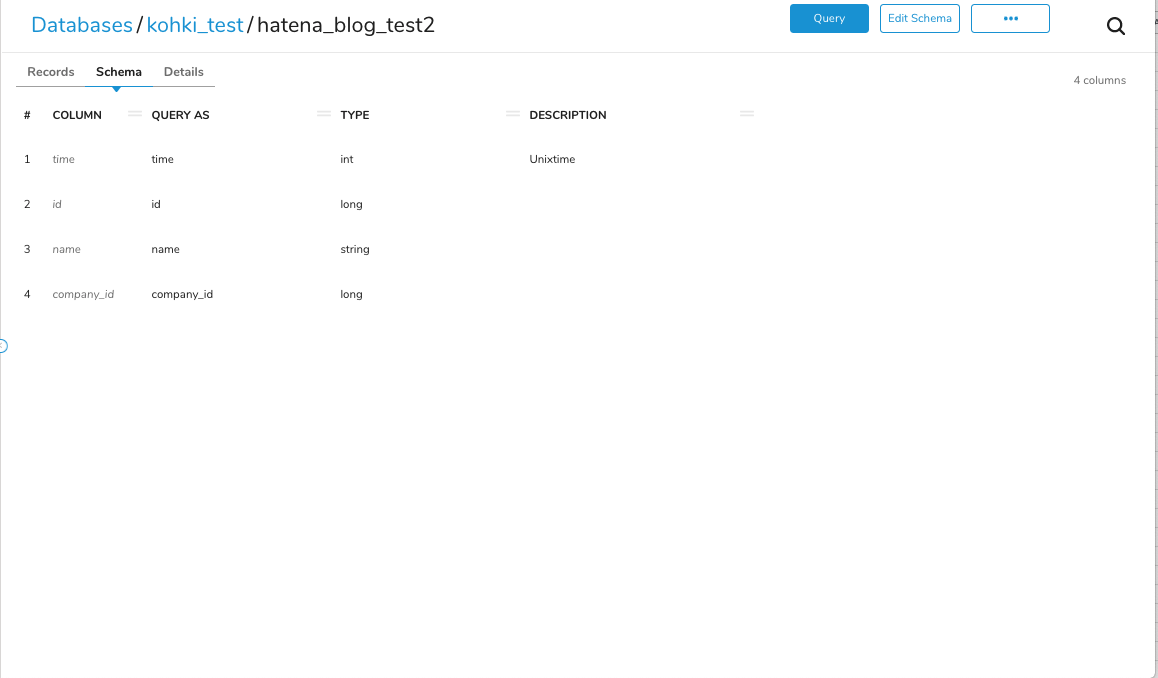

After performing the import again in this way, the destination table looked like this:

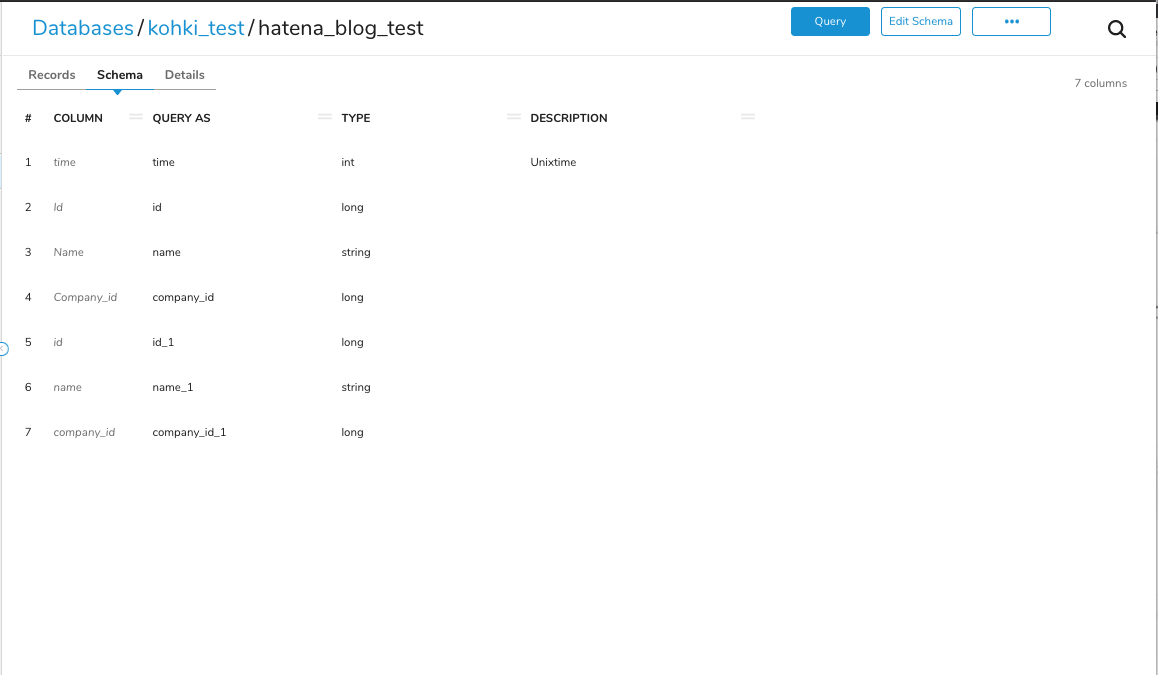

Checking the Schema tab, it seems that for column #6, a column named name_1 has been created with data in that column.

The reason for this behavior is that the combination of the COLUMN and QUERY AS values explained earlier—that is, the combination of the actual column name and the column name as Alias—is different, so the column is judged to be a separate column and consequently column name_1 is created.

There are two ways to solve this problem. The first is to prevent it from happening before you import the data by using the rename filter in your .yml file. The second is to fix the issue post data import by running a SQL query to reconfigure the data.

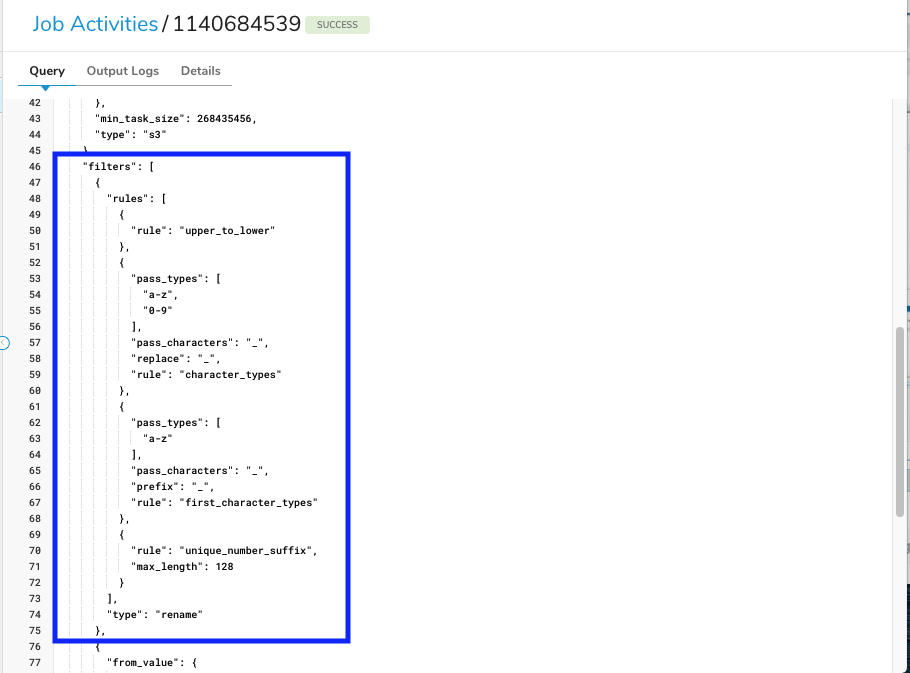

To prevent this from happening, it is safe to use the rename filter function. This function automatically renames actual column names defined in the .yml according to the specified rules.

It is generally a good idea to use this function to rename column name definitions to lowercase. Below you can find an example of how to rewrite the .yml file to include the rename filter function.

in:

type: s3

access_key_id: XXXXXXX

secret_access_key: XXXXXXXXX

bucket: kohkitest

path_prefix: test_folder/testfile.csv

parser:

charset: UTF-8

newline: LF

type: csv

delimiter: ","

quote: "\""

escape: "\""

trim_if_not_quoted: false

skip_header_lines: 1

allow_extra_columns: false

allow_optional_columns: false

columns:

- {name: Id, type: long}

- {name: Name , type: string}

- {name: Company_id, type: long}

filters:

- type: rename

rules:

- rule: upper_to_lower

- from_value: {mode: upload_time}

to_column: {name: time}

type: add_time

out: {mode: append}

exec: {}

If you import data using the above configuration you will see that both columns are lowercase, as intended, with no extra columns added.

By utilizing the rename filter in this way, you can rename column names based on your settings.

If a column like col_1 already exists, it is easy to rebuild the table by executing a SQL query against the table in which column name_1 occurred and store the data again with COLUMN and QUERY AS all in lower case.

In this example, the following query would be executed to rebuild the table so that all data is stored in the lowercase columns.

drop table if exists hatena_blog_test2;

create table if not exists hatena_blog_test2 as

select time,id,name,company_id

from hatena_blog_test

where id is not null

union all

select time, id_1 as id, name_1 as name, company_id_1 as company_id

from hatena_blog_test

where id_1 is not nullAfter creation, the table will look like this, with both COLUMN and QUERY AS aligned in lowercase. The table is now complete.

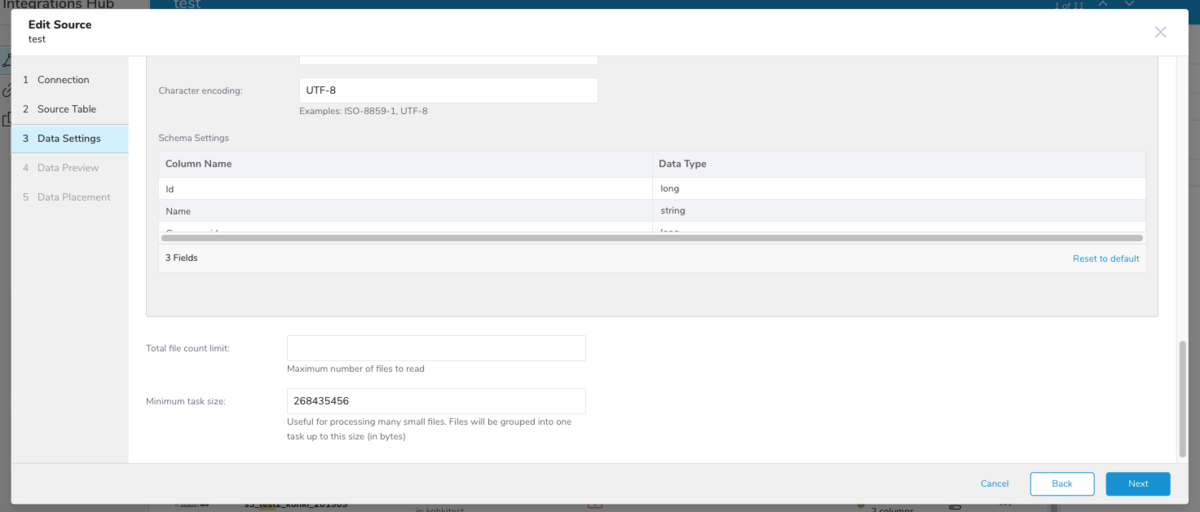

When importing from Sources on the Treasure Data console, the Schema Settings in the Data Settings section are used to define the actual column names set in the .yml file. You can change the column name to any name by clicking the column name.

In the case of jobs imported from Sources, if there are column names that contain uppercase letters, etc., the user does not have to specify them, but the rename filter is applied during the import process.

That's it. We've covered why this happens, how to prevent it,and how to clean up after you've already encountered the issue. We strongly suggest the liberal use of the rename filter as an ounce of prevention is worth a pound of cure.