As you might know, Treasure Data provides two types of query engines: Hive and Presto. Since the engines are different, there are natural differences regarding the syntax of query statements and supported functions. Of course, the basic syntax of typical functions like COUNT are the same. Although you can learn about the behavior of each function and syntax by looking at the documentation, I imagine that most of you will probably want to actually run the Hive or Presto job before implementation to production.

How, then, do you prepare your test data? Even in cases where a few rows of records are sufficient for testing, it is very troublesome to create an empty table and insert rows using the INSERT statement or to import a table via a bulk load job (e.g. using a Data Connector). Also, from an operational standpoint, it is not good to have an increasing number of tables that are used for testing even if the tables are deleted after the test is completed.

Therefore, let me show you in this article how to easily check the behavior of Hive or Presto jobs without creating a test table.

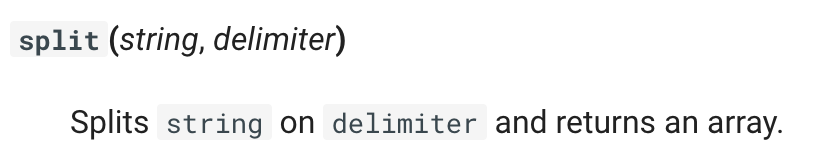

First, let me show this small test case. Suppose you wanted to use the SPLIT function in Presto. The documentation says the following:

If you don't know this function, it might be better to confirm the behavior before you use it in production query statements. Usually, you might create a new test table and store records in it for testing. After that you would run the following query statement.

SELECT SPLIT(col1, ',') as split_col

FROM table_a

WHERE col2=100;Of course, this will work for you. However, I think that it is excessive to create a new table in order to check the behavior of a function or a simple test case. Instead, consider passing a string directly to the argument of the SPLIT function instead of specifying a column name? It is easy to assume that a FROM clause is required, but the query statement works without it. Here is an example:

SELECT SPLIT('aaaa,bbbb,cccc,dddd', ',') as split_col;To see an example of the output of this query from the TD Console, go to Data Workbench > Queries > New Query. Select a database, and then enter your SQL query. Then select Run. Here is an example of the output using TD Console:

Here is an example of the output using the TD CLI or cURL:

curl --location --request POST 'https://api.treasuredata.com/v3/job/issue/presto/sample_test_db' --header 'Accept: application/json' --header 'Authorization: TD1 123/abcdef•••••••••••••••••••••••0123456789' -d "query=SELECT SPLIT('aaaa,bbbb,cccc,dddd', ',') as split_col;"

> {"job":"1838627512","job_id":"1838627512","database":"sample_test_db"}

curl --location 'https://console.treasuredata.com/v3/job/result/1838627512' --header 'Content-Type: application/json' --header 'Authorization: TD1 123/abcdef•••••••••••••••••••••••0123456789'

> "[""aaaa"", ""bbbb"", ""cccc"", ""dddd""]"td query -d sample_db_test -T presto -w "SELECT SPLIT('aaaa,bbbb,cccc,dddd', ',') as split_col;"

>

Job 1838376808 is queued.

Use 'td job:show 1838376808' to show the status.

started at 2023-06-06T19:43:51Z

presto version: 350

executing query: SELECT SPLIT('aaaa,bbbb,cccc,dddd', ',') as split_col

.

.

.

.

Status : success

Result :

WARNING: the job result is being downloaded...

+-------------------------------+

| split_col |

+-------------------------------+

| ["aaaa","bbbb","cccc","dddd"] |

+-------------------------------+

1 row in setIf you would like to check the behavior of an aggregation function via a query, a single row is not enough for testing. Therefore, you need to prepare multiple rows as test data. Let me show you how to achieve this without creating a test table using Presto and Hive.

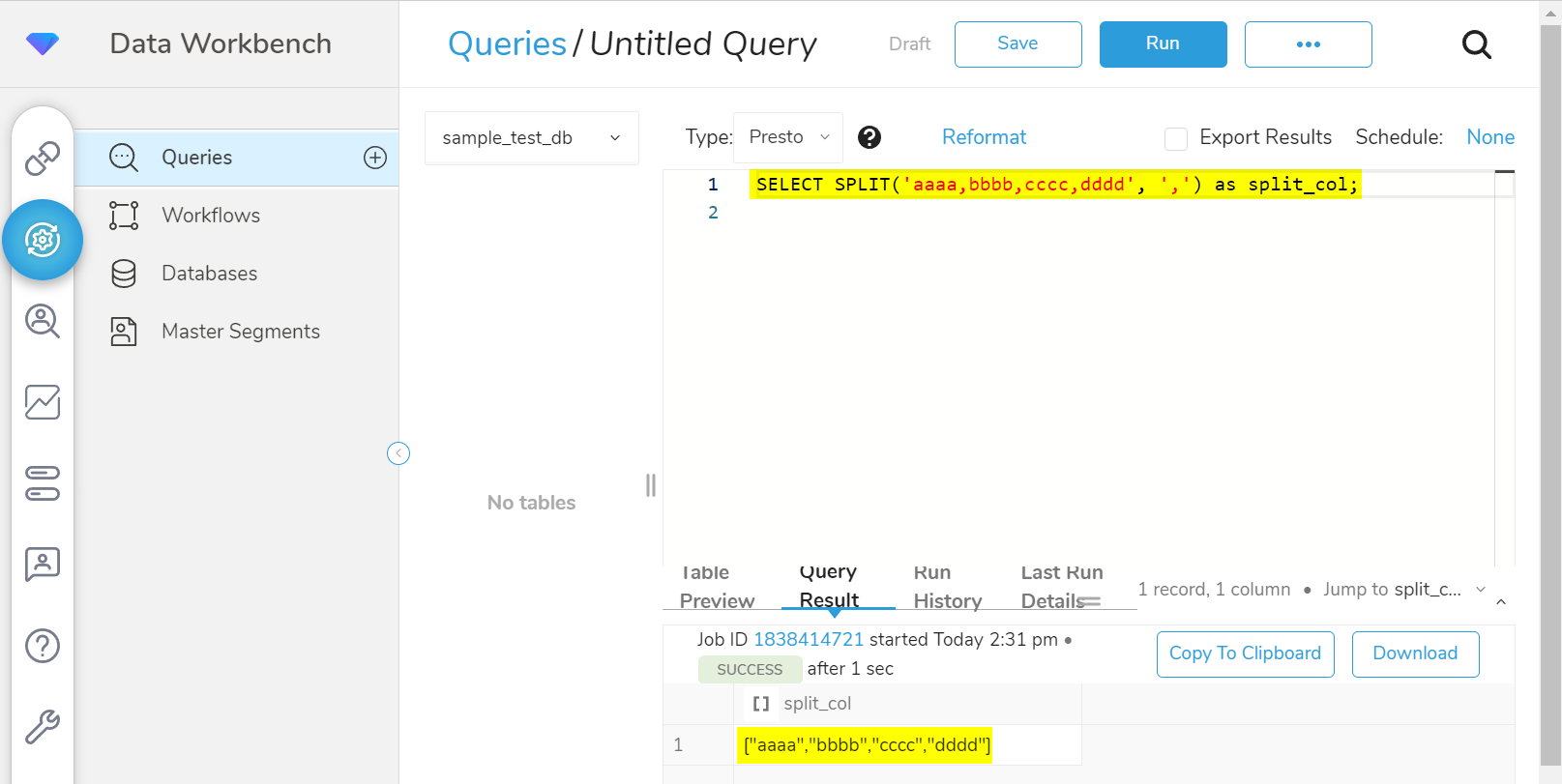

In the case of Presto, you can prepare multiple rows by using VALUES. You can define the multiple rows after the VALUES in the form (<first_row>), (second_row), ..., (nth_row). Regarding column names, you can define them by aliasing them with AS.

SELECT *

FROM (

VALUES

(1, 100, 'test1'), -- first row

(1, 200, 'test2'), -- second row

(2, 200, 'test3'), -- third row

(2, 400, 'test4'), -- fourth row

(2, 600, 'test5') -- fifth row

) AS t(col1, col2, col3) -- define column names

;Here is an example of the output using TD Console:

Here is an example of the output using the TD CLI or cURL:

curl --location --request POST 'https://api.treasuredata.com/v3/job/issue/presto/sample_test_db' --header 'Accept: application/json' --header 'Authorization: TD1 123/abcdef•••••••••••••••••••••••0123456789' -d "query=SELECT * FROM (VALUES (1, 100, 'test1'),(1, 200, 'test2'),(2, 200, 'test3'),(2, 400, 'test4'),(2, 600, 'test5') ) AS t(col1, col2, col3);"

> {"job":"1838631936","job_id":"1838631936","database":"sample_test_db"}

curl --location 'https://console.treasuredata.com/v3/job/result/1838631936' --header 'Content-Type: application/json' --header 'Authorization: TD1 123/abcdef•••••••••••••••••••••••0123456789'

>

1,100,test1

1,200,test2

2,200,test3

2,400,test4

2,600,test5td query -d sample_db_test -T presto -w "SELECT * FROM (VALUES (1, 100, 'test1'),(1, 200, 'test2'),(2, 200, 'test3'),(2, 400, 'test4'),(2, 600, 'test5')) AS t(col1, col2, col3)"

>

Job 1838434823 is queued.

Use 'td job:show 1838434823' to show the status.

started at 2023-06-06T21:00:40Z

presto version: 350

executing query: SELECT * FROM (VALUES (1, 100, 'test1'),(1, 200, 'test2'),(2, 200, 'test3'),(2, 400, 'test4'),(2, 600, 'test5')) AS t(col1, col2, col3)

.

.

.

.

Status : success

Result :

WARNING: the job result is being downloaded...

+------+------+-------+

| col1 | col2 | col3 |

+------+------+-------+

| 1 | 100 | test1 |

| 1 | 200 | test2 |

| 2 | 200 | test3 |

| 2 | 400 | test4 |

| 2 | 600 | test5 |

+------+------+-------+

5 rows in setThe advantage of this approach is that you can share the example with your colleague without the existing table. Of course, if you want a few dozen rows of test data, it may be easier to prepare a table instead of this example.

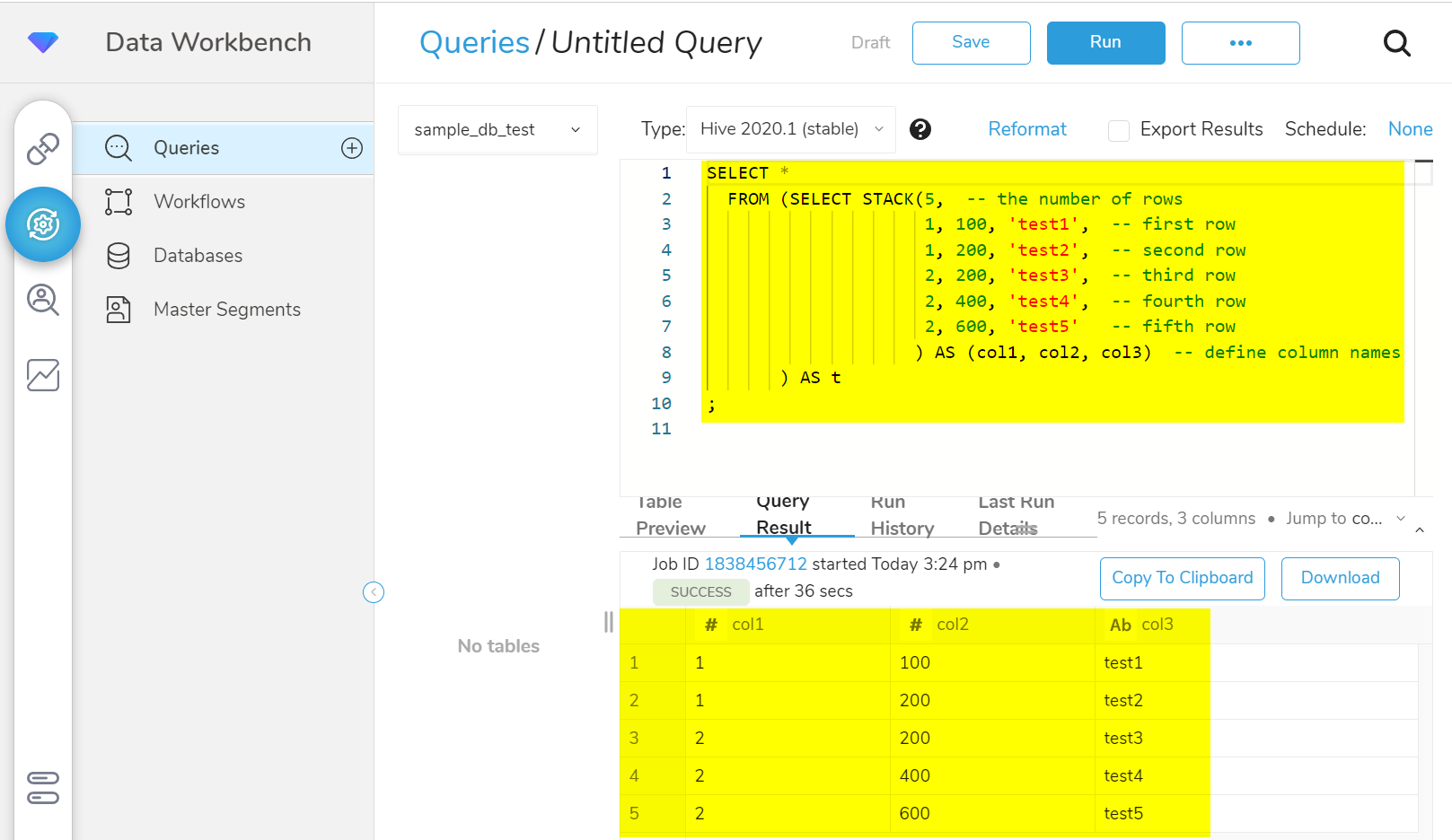

In the case of Hive, you can prepare multiple rows by using STACK. Unlike VALUES in Presto, you need to specify the number of rows as the first argument and not enclose each row in parenthesis.

SELECT *

FROM (SELECT STACK(5, -- the number of rows

1, 100, 'test1', -- first row

1, 200, 'test2', -- second row

2, 200, 'test3', -- third row

2, 400, 'test4', -- fourth row

2, 600, 'test5' -- fifth row

) AS (col1, col2, col3) -- define column names

) AS t

;Here is an example of the output using TD Console:

Here is an example of the output using the TD CLI or cURL:

curl --location --request POST 'https://api.treasuredata.com/v3/job/issue/hive/sample_test_db' --header 'Accept: application/json' --header 'Authorization: TD1 123/abcdef•••••••••••••••••••••••0123456789' -d "query=SELECT * FROM (SELECT STACK (5,1, 100, 'test1',1, 200, 'test2',2, 200, 'test3',2, 400, 'test4',2, 600, 'test5') AS (col1, col2, col3) ) AS t;"

> {"job":"1838639145","job_id":"1838639145","database":"sample_test_db"}

curl --location 'https://console.treasuredata.com/v3/job/result/1838639145' --header 'Content-Type: application/json' --header 'Authorization: TD1 123/abcdef•••••••••••••••••••••••0123456789'

>

1,100,test1

1,200,test2

2,200,test3

2,400,test4

2,600,test5td query -d sample_db_test -T hive -w "SELECT * FROM (SELECT STACK(5,1, 100,'test1',1, 200,'test2',2, 200,'test3',2, 400,'test4',2, 600,'test5')AS(col1, col2, col3)) AS t;"

>

Job 1838369237 is queued.

Use 'td job:show 1838369237' to show the status.

Hive history file=/mnt/hive/tmp/3867/hive_job_log_5c427fc1-ee26-4e52-9d8f-fa0c308e206e_123537841.txt

Job is running in resource pool: hadoop2 with priority: default

Execution engine version: 2020.1

TD Hive worker version: release-0.2.4-11028

.

.

.

.

Status : success

Result :

+------+------+-------+

| col1 | col2 | col3 |

+------+------+-------+

| 1 | 100 | test1 |

| 1 | 200 | test2 |

| 2 | 200 | test3 |

| 2 | 400 | test4 |

| 2 | 600 | test5 |

+------+------+-------+

5 rows in setThe techniques described in this article will help you when you attempt to confirm the behavior of a function or query statement using either Hive or Presto. When you encounter unexpected behavior, use these methods to reproduce the behavior, and then you can share the query statement with your team or with support.