The Web Traffic Analytics package enables CDP users to track important web traffic Key Performance Indicators (KPIs). It enables filtering and comparing web traffic from Country/City, Device/Browser, utm_params such as medium, source, campaign, datetime, and others. Some of the Out-of-Box (OTB) metrics include:

Total pageviews for the website

Weekly page visits

Top visited page sections

Pageviews heatmap by country

Total pageviews by device

Weekly page visits by channel

Total and weekly conversions by medium

Campaign effects on conversion

To add custom metrics after installation, contact TD support.

- Track web-traffic KPIs and gain valuable insights to monitor resources.

- Track performance of marketing channels and campaigns on website traffic and conversions

- Measure and communicate the business value of CDP across your organization.

- TD Account must have Treasure Insights (TI) enabled.

- TD user installing the package must have Edit Data Models & Dashboards TI permissions in TD.

- TD user installing the package must have Workflow Edit & Run permissions in TD and must have access to the databases where the tables with the KPI metrics live.

- It is recommended that you monitor datamodel size monthly in the V5 console under the Insights Models tab and ensure it does not exceed 4 GB over time. This should not happen with the Out-of-Box (OTB) configuration, contact TD support in the rare event that this occurs.

Perform the following main steps for configuring the package installation in the Marketplace UI. Note that each step in the UI also comes with notes and examples that can guide you through the installation process.

Enter parameters such as MasterAPI Key, API Endpoint, Working Database, Project Name, which will be used to install and run the package in your TD account and save the output tables in the provided database name.

Treasure Data strongly recommends that you take advantage of time-based partitioning. Refer to the performance tuning article for more information. Not using time-based filtering in SQL SELECT statements can cause inefficient full table scans that affect query performance.

If you want to create a new working database, create one in TD and provide the name. Ensure that a project name is new and unique, which reduces the risk of losing existing workflows.

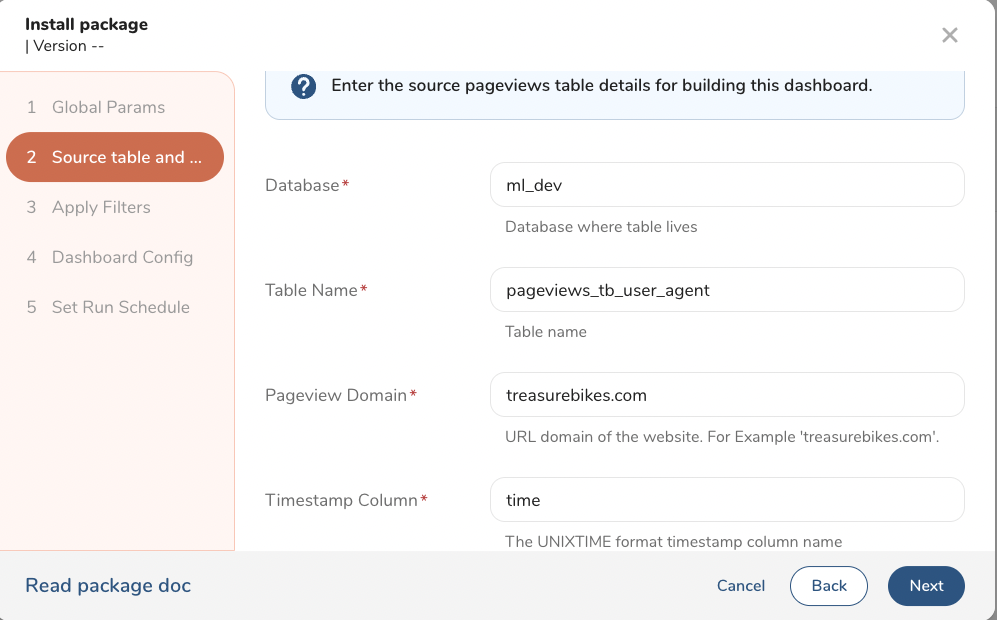

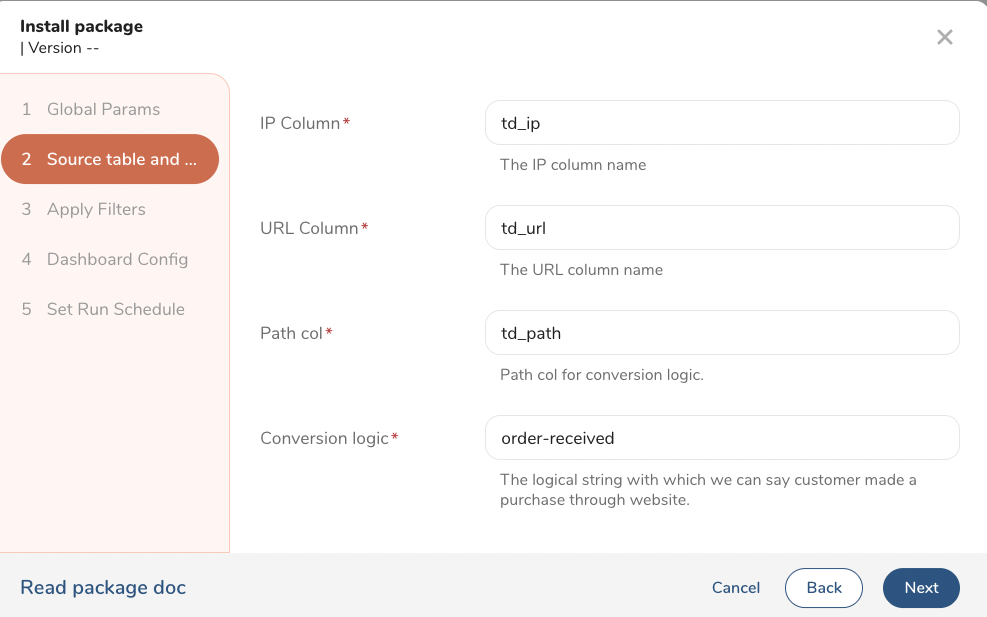

Enter your source pageview database and pageview table names, pageview domain name. For example, treasurebikes.com, pageview unix timestamp, url, ip_address columns from the source pageview table. For conversion logic, provide path column name and conversion logic logical string in the path column that identifies if a customer made purchase through website. For example, order-received.

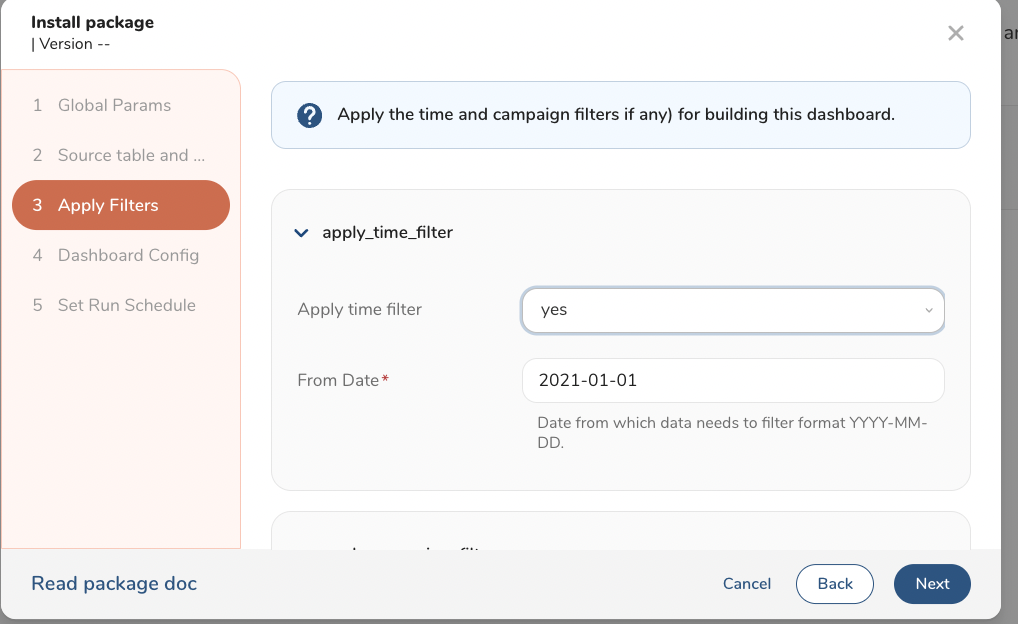

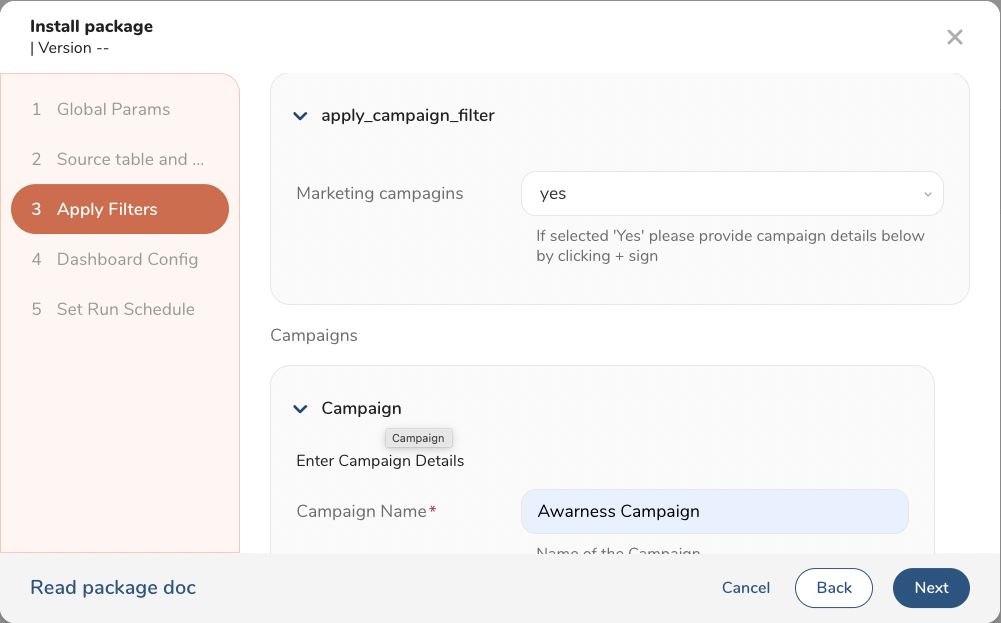

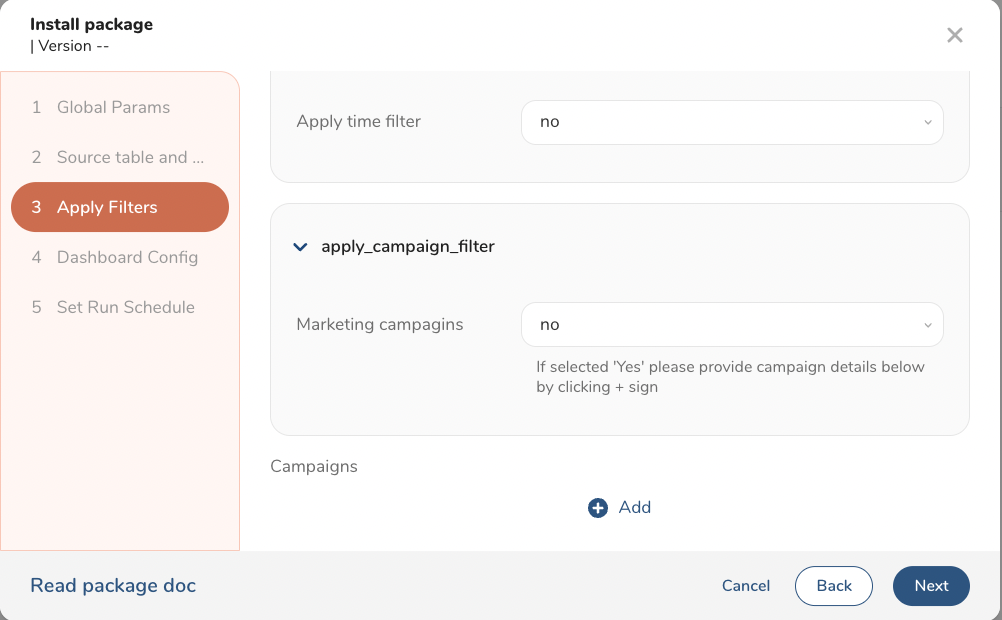

Define custom filters if you want to define specific dates from which KPI metrics will be tracked. For example, configure marketing campaigns with a start and end date in order to track metrics across campaigns in that period.

Provide a list of emails of TD users who should have access to the dashboard after the package is installed.

All emails on the list, should have at least View Dashboard permissions in Treasure Insights, otherwise dashboard build might fail.

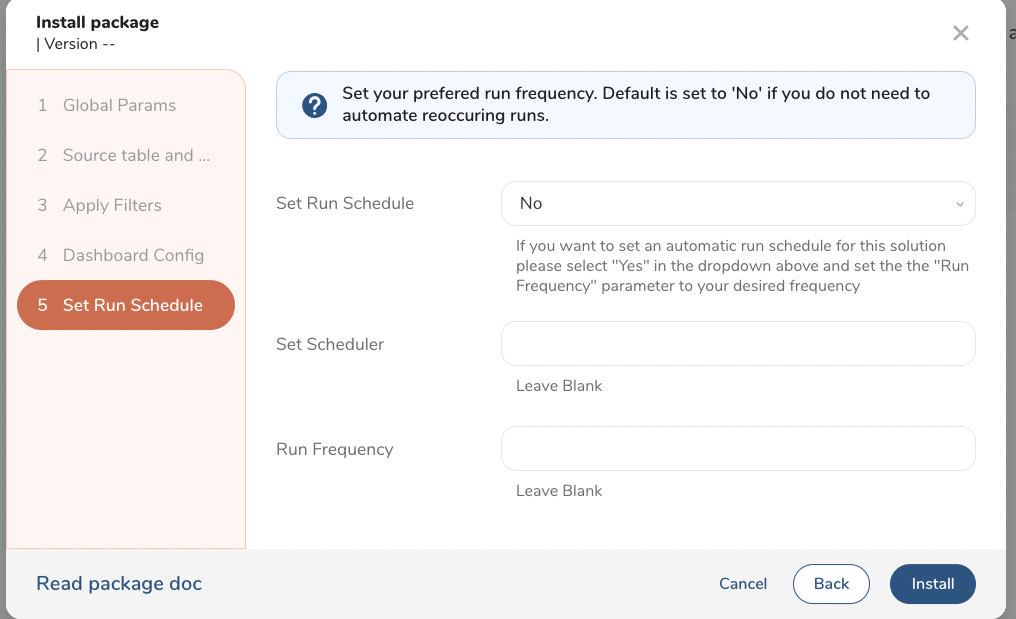

You can opt to schedule the dashboard refresh on a daily, weekly or any other custom schedule. You can also decide to skip scheduling the solution during installation and do that later by locating the installed project and entering the scheduling parameters in the main web_analytics_dashboard.dig file.

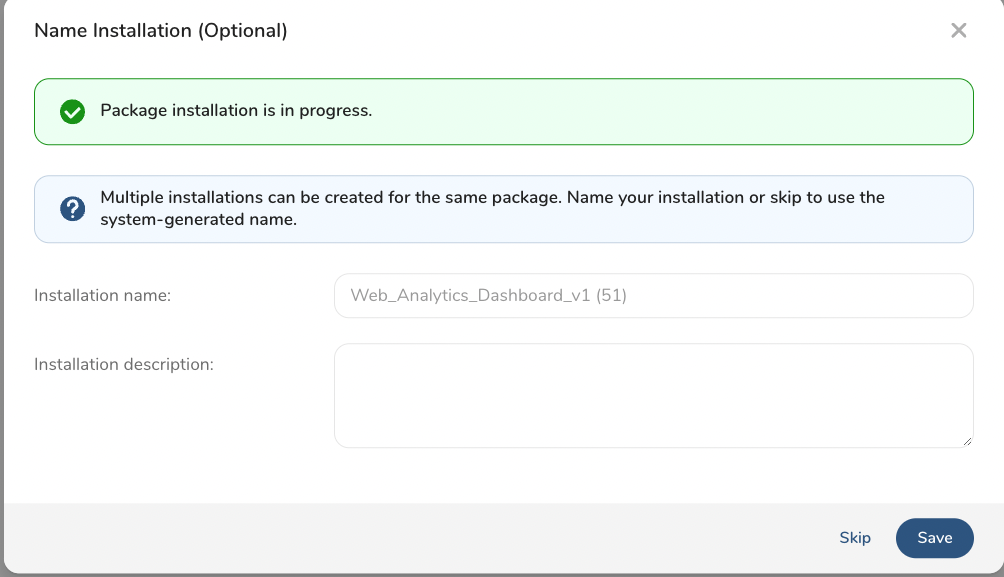

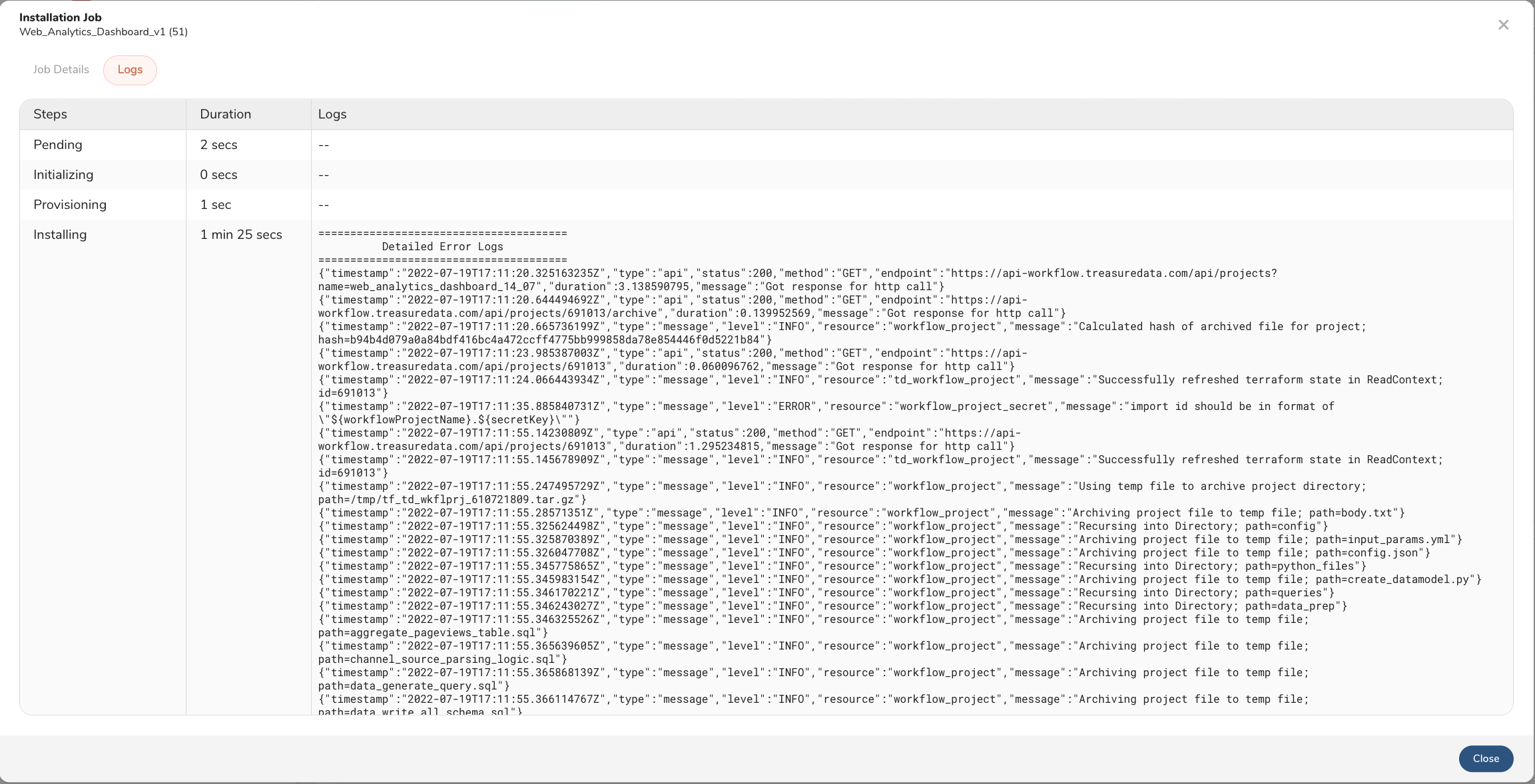

After you fill out the prameters in the UI for the first five steps, you will be presented with an Install button. Select that and you will be linked to a screen where you can monitor the installation logs. If you get a success message, it means that all parameters were accepted and the package was installed succesfully in your TD account. If you get an error message, check the Error Logs for more details. If you cannot determine the reason of the failure, contact TD technical support.

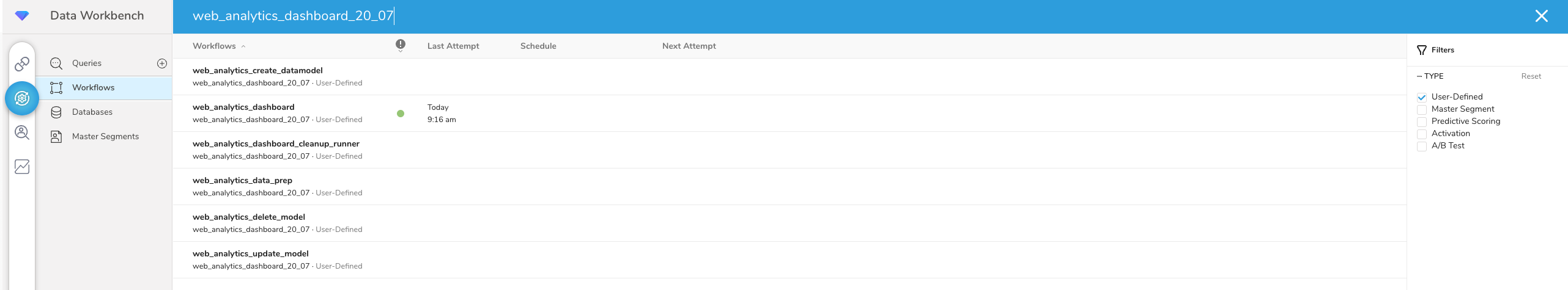

A succesful package installation will trigger a workflow run in TD, which might take anywhere between 10 to 20 minutes depending on the data volumes and your compute units. You must wait for the Workflow Run to complete before you can explore the output tables and the Web Traffic Analytics dashboard. If you want to monitor the workflow runs, navigate to Data Workbench > Workflows in the TD Console and search for the web_analytics_dasboard workflow. If the workflow is still running, you should see a blue dot under Run History, and if the run was succesfull, you will see a green dot. If the workflow throws an error, you will see a red dot and you can go explore the Error Logs and contact TD support for help.

The workflow is set up, so that the end user only needs to configure the .yml files in the config\ folder and the config.json file in the main project folder. The workflow can be ran to execute end-to-end code automatically. In fact, these files are automatically configured for you during the package installation, because the parameters you are entering in the UI will be dynamically populated in the proper lines inside the yml and json configuration files.

However, you can modify these parameters after installation manually when further customization of the solution is needed to fit additional data context and use cases that might not be covered by the OTB configuration. The details on how to configure each yml or configuration file are as follows:

After installation, manual customizations are ONLY recommended for technical users with some experience with DigDag and SQL/Python. If you do not have such resources available, contact TD support and we can help customize some of the code to your needs, as possible.

config/input_params.yml - Controls important parameters, which are often applied globally across most of the data sources and processes in the workflow.

##################################################################### ########################## GLOBAL PARAMS ############################ ##################################################################### globals: sink_database: test_suraj #database where the model output tables are saved main_id_key: td_canonical_id #main unique identifier column_name api_endpoint: 'api.treasuredata.com' #API endpoint for the TD account where customer data lives model_config_table: 'datamodel_build_history' #table where model buildout history and OIDs are saved run_data_prep_wf: 'no' #controls if data preparation workflow needs to be run create_model: 'no' #controls if new data model will be created using Python Scripts and config.json params update_insights_model: 'no' #controls if data model that was created for Dashboard will be built and updated cleanup_temp_tables: 'yes' #cleansup temporary tables delete_model: 'no' #controls if data model will be deleted project_prefix: Web_analytics #all output tables and project sub-workflows will start with this prefix ############# INPUT PAGEVIEWS TABLE ######################### pageviews_table_db: ml_dev #source database pageviews_table_name: pageviews_tb_user_agent #source table pv_main_domain: 'treasurebikes.com' #website to parse traffic for pv_unixtime_col: time #web visits event time col pv_ip_col: td_ip #web visits ip col pv_url_col: td_url #web visits url col pv_conversion_logic: REGEXP_LIKE(td_path, 'order-received') #web visits logic for conversion ################ CUSTOM DATA FILTER PARAMS ##################### apply_time_filter: 'yes' #data filter yes/no pertaing to time start_date: 2021-01-01 #date to filter data from filter_regex: WHERE REGEXP_LIKE(td_language, 'uk|en') ######### TIME FILTER CAMPAIGN LABELS ###################### create_custom_date_flags: 'no' #campaign filter yes/no if any date_all_flags: - label: 'None' start_date: '0000-00-00' end_date: '0000-00-00' date_flags: "<%= config.apply_campaign_filter.apply_campaign_filter2 %>" #campaign filter yes/no if any date_flags_new: #campaigns and their start and end date - label: 'Awareness Campaign' start_date: '2021-02-21' end_date: '2022-03-01' - label: 'Retargeting Campaign' start_date: '2021-03-01' end_date: '2022-03-01' ####################### TEMPORARY TABLES LIST ######################### temporary_tables: - pageviews_tempconfig.json - Controls important parameters used by Python Custom Scripting

.pyfiles to create the final JSON for the datamodel and build and share it via the API.{ "model_name": "test_web_analytics_insight_model3" (name of datamodel) , "model_tables": [ {"db":"test_suraj","name":"web_analytics_global_time_filter"}, {"db":"test_suraj","name":"web_analytics_pageviews_aggregate"}, {"db":"test_suraj","name":"web_analytics_path_stats"}, {"db":"test_suraj","name":"web_analytics_unqiue_visitors"} ] (list of tables to be added to datamodel) , "shared_user_list": ["saisuraj.argula+psdemo@treasure-data.com"] (list of users to share datamodel with) , "change_schema_cols": {"date": ["event_date"], "text": ["ENTER_NAME"], "float": ["ENTER_NAME"], "bigint": ["ENTER_NAME"]} (list of columns you want to change datatype from raw table to datamodel. Ex. in "date" you provide column names that will be converted to `datetime`) , "join_relations": {"pairs": [ {"db1": "test_suraj","tb1":"web_analytics_global_time_filter","join_key1":"event_date","db2": "test_suraj","tb2":"web_analytics_pageviews_aggregate","join_key2":"event_date"}, {"db1": "test_suraj","tb1":"web_analytics_global_time_filter","join_key1":"event_date","db2": "test_suraj","tb2":"web_analytics_path_stats","join_key2":"event_date"}, {"db1": "test_suraj","tb1":"web_analytics_global_time_filter","join_key1":"event_date","db2": "test_suraj","tb2":"web_analytics_unqiue_visitors","join_key2":"event_date"} ] (if any joins were required you can add a list of table_name:join_key pairs) } }

web_analytics_dashboard.dig - Runs the main project workflow that triggers an entire project execution end-to-end, including all sub-workflows and queries in project folder. Contains scheduling parameters of the workflow.

web_analytics_dashboard_data_prep.dig - Reads from the

input_params.ymlfile and executes a list of tasks and queries that will extract data from the source tables and then aggregating metrics to store the aggregated data in sink database.web_analytics_dashboard_datamodel_create.dig - Creates the datamodel that powers the Dashboard by reading from the

config.jsonfile.web_analytics_dashboard_update_model.dig - Updates the existing datamodel or dashboard with the latest data generated by each workflow run.

web_analytics_cleanup_runner.dig - Cleans up the temporary tables created in the sink databases in the process of creating the datamodel.

web_analytics_delete_model.dig - Optional workflow that comes with the package and can be used to delete the datamodel created in the past to recreate a new one.

- web_analytics_global_time_filter - Table of all dates and campaign date filters.

| time | event_date | campaign_date_filter |

|---|---|---|

| 1654112366 | 2021-02-13 | All Others |

- web_analytics_pageviews_aggregate - This table aggregates unique vistors for page and conversions across channel, source, campaign date.

| time | event_date | channel_final | source_final | campaign_final | referal_domain | country_ip | city_ip | device | unique_events | total_conversions |

|---|---|---|---|---|---|---|---|---|---|---|

| 1654112366 | 2021-02-07 | social | retargeting | googlead.doubleclick.net | India | 1Delhi | Other Crawler | 1 | 0 |

- web_analytics_path_stats - This table aggregates the daily total visitors and unique vistors for each of paths.

| time | event_date | td_path | total_visitors | unique_visitors |

|---|---|---|---|---|

| 1654112366 | 2021-03-25 | /shop/accessories/ | 22 | 21 |

- web_analytics_unqiue_visitors - This table aggregates the daily visitors and unique visitors.

| time | event_date | total_uniques | total_visitors | unique_visitors |

|---|---|---|---|---|

| 1654112366 | 2021-03-11 | 363 | 127 | 65 |

The Web Traffic Analytics dashboard reads from an Insights Model build from the parameters listed in the config.json file. By default, this includes the four tables listed - web_analytics_global_time_filter, web_analytics_pageviews_aggregate, web_analytics_path_stats and web_analytics_unqiue_visitors. More tables and metrics can be added on a customer's request to fulfill additional use case requirements.

Data engineers or analysts who are comfortable with Workflows and Treasure Insights can easily add more custom metrics to this dashboard by using the workflow configuration files and custom SQL queries.

import Pageviews from './img/Pageviews.png' import Pageviewsbydeviceandchannel from './img/Pageviewsbydeviceandchannel.png' import Conversions from './img/Conversions.png' import Campaignmetrics from './img/Campaignmetrics.png'

<Carousel2>

High Level Summary of Total pageviews , weekly pageviews total and unique, Top 10 page sections total visits and unique vistors, pageview heatmap by country further these widgets can be filtered by campaigns, country/city, device/browser.

Provides Widgets for KPIs such as pageviews by device and weekly pagevisits by channel further these widgets can be filtered by campaigns, country/city, device/browser.

Provides Widgets for tracking Total conversions by medium and weekly conversions by medium further these widgets can be filtered by campaigns, country/city, device/browser.

Provides Widgets for tracking camapign effects on conversions, campaign effect on new user acquisition further these widgets can be filtered by campaigns, country/city, device/browser.

extract_pageviews - Contains

event_date, this, that,referral_domainand etc.extract_pageviews_date_filter.sql - This query reads the data from the source database and table extracting parameters like country, city from ip column, utm parameters from url column and browser details from

user_agentcolumn. Further, data is filtered by start date provided while installing the project.WHERE TD_TIME_RANGE(${pv_unixtime_col}, '${start_date}')If not provided, this query is bypassed by following query without any filter.

extract_pageviews_temp.sql - This query reads the data from the source database and table extracting parameters like country, city from ip column, utm parameters from url column and browser details from

user_agentcolumn.channel_source_parsing_logic.sql - This query reads the data from the source database and table extracting parameters for device, channel and source, campaign, referral domain, so on.

aggregate_pageviews_table.sql - This query aggregates pageviews and conversions on

event_date,channel,source,campaign,referal_domain,country_ip,city_ip, device. This table powers the Web Traffic Analytics Dashboard widgets and filters.path_visits.sql - This query aggregates total visitors and unique visitors on date and paths. This table powers the Web Traffic Analytics Dashboard widgets and filters.

unique_visitors.sql - This query aggregates total visitors and unique visitors on event date, this also counts total unique visitors, so far. This table powers the Web Traffic Analytics Dashboard widgets and filters.

global_time_filter.sql - This query generates all date and campaigns on those days from the input parameters. This table powers the Web Traffic Analytics dashboard widgets and filters.

After you get the success message after a package installation, you can locate the installed workflow by going to the Console UI and navigating to the Data Workbench > Workflows tab on the left. The name of the project should be the same as the name that you gave it in the Project Name tab during package installation. Use this documentation as a guide on how you can modify the input_params.yml file to apply additional filters or add more metrics to the output tables, which can be manually added to the Template dashboard.

Find the installed workflow project using the guide from the paragraph above and identify the web_analytics_dashboard.dig file, which is the main project file that runs the entire end-to-end solution. Select Launch Project Editor on the top right in the console and then select Edit File on the bottom right. At the very top of the file, you might see an empty section as such:

###################### SCHEDULE PARAMS ##################################To schedule the workflow, add syntax so that the final syntax looks as follows:

###################### SCHEDULE PARAMS ##################################

timezone: UTC

schedule:

daily>: 07:00:00The above example will automatically run the workflow every day at 7:00 am UTC time. For more scheduling options, refer to the DigDag documentation at Setting up a Schedule.

No. Output Tables, Workflow or Dashboards created by the package will NOT be deleted if the package is uninstalled.

Contact your TD representative and they will be able to dedicate an Engineering resource that can help guide you through the installation process and help you with any troubleshooting or code customizations, if needed.