Support for Hive 4 is now available on the Treasure Data console.

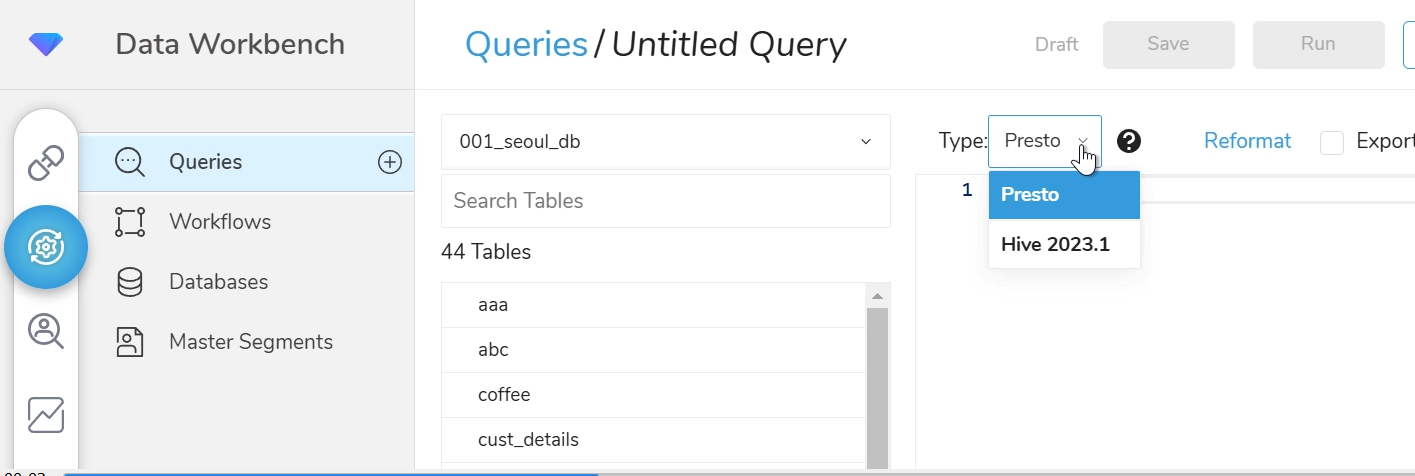

After your account has been enabled for Hive 4 functionality, the drop-down menu for Type in the Queries window will include Hive 2023.1. Selecting this option will cause TD to process your query with Hive 4.

Using TD Toolbelt, you can specify a Hive 4 query by including the command line option

-T hive --engine-version stable.

For example, a Hive 4 query would look something like this:

td query -w -d sample_datasets "SELECT COUNT(1) FROM www_access" -T hive --engine-version stableUsing the Python Client you can specify a Hive 4 query by specifying the engine_version as stable. For example you can schedule a job using Hive 4 with the following Python code:

import tdclient

with tdclient.Client() as td:

job = td.query(

"sample_datasets",

"SELECT COUNT(1) FROM www_access",

type="hive",

engine_version="stable",

)

job.wait()

for row in job.result():

print(repr(row))import tdclient

with tdclient.Client() as td:

td.create_schedule(

"sched-example",

{

"type": "hive",

"engine_version": "stable",

"query": "select count(1) from www_access",

"database": "sample_datasets",

"cron": "0 13 * * *",

},

)TD has added support for the following SQL features in Hive 4:

Quotes a string to produce a result that can be used as a properly escaped data value in an SQL statement. The string is returned enclosed by single quotation marks and with each instance of backslash, single quote ('), ASCII NUL, or Control+Z preceded by a backslash.

If the argument is NULL, the return value is the word “NULL” without enclosing single quotation marks.

SELECT str, quote(str) FROM test_quote;

+-------------------+----------------------+

| str | _c1 |

+-------------------+----------------------+

| Don't say "lazy" | 'Don\'t say "lazy"' |

+-------------------+----------------------+JSON_READ parses the given json according to the given complex type specification.

WITH parsed AS (

SELECT json_read('[{"user_id": 1, "item_ids": [1, 5]}, {"user_id": 2, "item_ids": [2, 8]}]', 'array<struct<user_id:int,item_ids:array<int>>>') AS users

)

SELECT

users[0].user_id AS user_id_0, users[0].item_ids AS item_ids_0,

users[1].user_id AS user_id_0, users[1].item_ids AS item_ids_0,

users

FROM parsed;

+------------+-------------+--------------+---------------+----------------------------------------------------+

| user_id_0 | item_ids_0 | user_id_0_1 | item_ids_0_1 | users |

+------------+-------------+--------------+---------------+----------------------------------------------------+

| 1 | [1,5] | 2 | [2,8] | [{"user_id":1,"item_ids":[1,5]},{"user_id":2,"item_ids":[2,8]}] |

+------------+-------------+--------------+---------------+----------------------------------------------------+The following hyperbolic built-in functions for MATH usage have been added: sinh, cosh, tanh.

This function rounds timestamp agr1 to the beginning of window interval where it belongs to.

SELECT tumbling_window(cast('2024-05-12 15:23:59' as timestamp), interval '2' MINUTES);

+------------------------+

| _c0 |

+------------------------+

| 2024-05-12 15:22:00.0 |

+------------------------+Introduced the FORMAT clause to CAST statements as well as to SQL:2016 datetime formats. Here is a limited list of datetime formats supported: YYYY, MM, DD, HH, MI, SS, YYY, YY, Y, RRRR, RR, DDD, HH12, HH24, SSSSS, FF[1..9], AM/A.M., PM/P.M., TZH, TZM.

SELECT

cast(cast('2024-05-12 15:23:59' as timestamp) as string format 'yyyy-MM-dd'),

cast('2024/05/12 15:23:59' as timestamp format 'yyyy/MM/dd HH24:MI:ss');

+-------------+------------------------+

| _c0 | _c1 |

+-------------+------------------------+

| 2024-05-12 | 2024-05-12 15:23:59.0 |

+-------------+------------------------+- array_min(array(obj1, obj2,...)): Returns the minimum value in an array with elements for which order is supported, returns null if array is empty

- array_max(array(obj1, obj2,...)): Returns the maximum value in an array with elements for which order is supported, returns null if array is empty

- array_distinct(array(obj1, obj2,...)): Returns an array of the same type as the input argument where all duplicate values have been removed.

- array_join(array, delimiter, replaceNull): Concatenate the elements of an array with a specified delimiter Example:

- array_slice(array, start, length): Returns the subset or range of elements from an array (subarray)

- array_except(array1, array2): Returns an array of the elements in array1 but not in array2, without duplicates.

- array_intersect(array1, array2): Returns an array of the elements in the intersection of array1 and array2, without duplicates.

- array_union(array1, array2): Returns an array of the elements in the union of array1 and array2 without duplicates.

- array_remove(array, element): Removes all occurrences of element from array.

SELECT

percentile_cont(ws_sales_price, 0.5),

percentile_disc(ws_sales_price, 0.5)

FROM web_sales;If approximate results are acceptable, there is a class of specialized algorithms called streaming algorithms, or sketches, that can produce results orders of magnitude faster and with mathematically proven error bounds. For interactive queries there may not be other viable alternatives, and in the case of real-time analysis, sketches are the only known solution.

Hive4 integrate with Apache Datasketches library more closely

- HyperLogLog

- ds_hll_estimate

- ds_hll_estimate_bounds

- ds_hll_sketch

- ds_hll_stringify

- ds_hll_union

- ds_hll_union_f

- Theta Sketch

- ds_theta_estimate

- ds_theta_exclude

- ds_theta_intersect

- ds_theta_intersect_f

- ds_theta_sketch

- ds_theta_union

- ds_theta_union_f

- Tuple Sketch

- ds_tuple_arrayofdouble_estimate

- ds_tuple_arrayofdouble_estimate_bounds

- ds_tuple_arrayofdouble_means

- etc...

This function returns a string describing the type of the argument.

SELECT typeof('text'), typeof(1), typeof(CAST(NULL AS bigint)), typeof(array(1.0));

+---------+------+---------+----------------------+

| _c0 | _c1 | _c2 | _c3 |

+---------+------+---------+----------------------+

| string | int | bigint | array<decimal(1,0)> |

+---------+------+---------+----------------------+This function helps to retrieve each column name and it's data type.

SELECT get_sql_schema('select ws_item_sk, max(ws_quantity) as max_quantity from web_sales group by ws_item_sk');

+---------------+-----------+

| col_name | col_type |

+---------------+-----------+

| ws_item_sk | bigint |

| max_quantity | int |

+---------------+-----------+The following limitations or differences exist between the stable version of Hive 2 (Hive 2020.1) and Hive 4.

While not necessarily a limitation, for ORDER_BY, the 2023.1 default ascending sort order (ASC) is NULLS LAST, and the default descending sort order (DESC) is NULLS FIRST.

ORDER BY name;

null aaa

aaa -> bbb

bbb nullAnd, to use traditional sorting you can use the following keyword:

ORDER BY name NULLS FIRST;or

ORDER BY name NULLS LAST;The default mask has changed from MD5 to SHA256.

In Hive 4, you will see a change in the number of log messages related to time index filtering in output log. These messages will look something like this:

** WARNING: time index filtering is not set on suprith_test.million_partitions!

** This query could be very slow as a result.

** If you used 'unix_timestamp' please modify your query to use TD_SCHEDULED_TIME instead

** or rewrite the condition using TD_TIME_RANGE

** Please see https://docs.treasuredata.com/display/public/PD/Hive+Performance+Tuning#HivePerformanceTuning-LeveragingTime-basedPartitioningHive3 added the hive.remove.orderby.in.subquery property to disable sorting in subqueries (see HIVE-6348.This feature continues in Hive 4. Functions that rely on UDF sorting, such as TD_SESSIONIZE are not affected by this change.

The strings "true" and "false" can now be evaluated as TRUE/FALSE using the AbstractGenericUDAFResolver. However, strings such as "aaa" still return true, but functions like TD_AVGIF and TD_SUMIF will return different results given “false” as an argument.

SELECT

TD_SUMIF(1, "true"), --> 1

TD_SUMIF(1, "false"), --> 1

TD_SUMIF(1, "aaa"), --> 1SELECT

TD_SUMIF(1, "true"), --> 1

TD_SUMIF(1, "false"), --> null

TD_SUMIF(1, "aaa"), --> 1While this feature is support, be aware that it is currently impossible to change the default time zone used during the execution of the query. Therefore, the “local” time zone used will always be GMT+0 (UTC).

In some cases, common table expressions (CTEs) can fail if you use a null literal in a SELECT statement. For example, here is CTE that fails:

WITH x AS (SELECT null AS mofu, 'POST' AS method)

SELECT t1.method

FROM sample_datasets.www_access t1

WHERE EXISTS (SELECT 1 FROM x t2 WHERE t1.method=t2.method)

UNION ALL

SELECT x.method FROM x

ql.Driver: FAILED: SemanticException [Error 10305]: CREATE-TABLE-AS-SELECT creates a VOID type, please use CAST to specify the type, near field: mofu

org.apache.hadoop.hive.ql.parse.SemanticException: CREATE-TABLE-AS-SELECT creates a VOID type, please use CAST to specify the type, near field: mofuYou should explicitly cast the null literal to the expected type. For example, in this CTE null is cast to type string:

WITH x AS (SELECT CAST(null AS STRING) AS mofu, 'POST' AS method)Stateful UDFs such as TD_X_RANK cannot be used anywhere other than in the SELECT list. For example, specifying TD_X_RANK in the HAVING clause causes this query to fail:

SELECT col1, col2 FROM table

HAVING TD_X_RANK(col2) < 10

FAILED: SemanticException [Error 10084]: Stateful UDF's can only be invoked in the SELECT list

org.apache.hadoop.hive.ql.parse.SemanticException: Stateful UDF's can only be invoked in the SELECT listTo fix this you use a window function with a QUALIFY filter.

SELECT

col1, col2,

ROW_NUMBER() OVER(PARTITION BY col1 ORDER BY col2 DESC) AS rank

FROM table

QUALIFY

rank < 10SELECT

col1, col2,

FROM table

QUALIFY

ROW_NUMBER() OVER(PARTITION BY col1 ORDER BY col2 DESC) < 10In some cases, due to query compilation optimizations introduced in Hive 2023.1, HAVING clauses that reference aliases inside CTEs no longer work.

WITH x (

SELECT col1, COUNT(*) AS num FROM table1

GROUP BY 1

HAVING NUM > 10

)

SELECT * FROM table2 WHERE col1 IN (SELECT col1 FROM x)

FAILED: CalciteSubquerySemanticException Encountered Select alias 'num' in having clause 'num > 10' This non standard behavior is not supported with cbo on. Turn off cbo for these queries.Rewrite your CTE so the HAVING clause does not reference an alias.

WITH x (

SELECT col1, COUNT(*) AS num FROM table1

GROUP BY 1

HAVING COUNT(*) > 10

)

SELECT * FROM table2 WHERE col1 IN (SELECT col1 FROM x)Nested parentheses within subqueries are no longer allowed in Hive 2023.1.

SELECT col1, AVG(col2)

FROM (

(

SELECT col1, col2 FROM table WHERE time = 252691200

)

) sub

GROUP BY col1

org.apache.hadoop.hive.ql.parse.ParseException: line 6:0 cannot recognize input near ')' 'sub' 'GROUP' in subquery sourceRewrite your subquery without nesting parentheses.

SELECT col1, AVG(col2)

FROM (

SELECT col1, col2 FROM table WHERE time = 252691200

) sub

GROUP BY col12023.1 throws a syntax error when you specify a numeric value for YEAR. The same applies to other UDFs(e.g. MONTH, DAY, HOUR, MINUTE, SECOND and WEEKOFYEAR) that handle dates. The value you specify for these UDFs must be a timestamp string.

SELECT YEAR(2024)

FAILED: SemanticException [Error 10016]: Line 1:12 Argument type mismatch '2024': year does not take INT typeTo resolve this issue specify a valid timestamp string.

SELECT YEAR('2024-01-01'){any db name}.TD_••• succeeds with 2020.1, but fails with 2023.1.

Here is an example of how TD_URL_DECODE with a database name fails in 2023.1:

SELECT sample_datasets.TD_URL_DECODE(path) FROM sample_datasets.www_access

FAILED: SemanticException [Error 10011]: Invalid function sample_datasets.TD_URL_DECODEYou can avoid the problem by removing the database name from the UDF identifier.

SELECT TD_URL_DECODE(path) FROM sample_datasets.www_accessHive 2023.1 introduced the following reserved keywords. If you use any of these keywords as table names or column names, you will need to quote them with backticks.

- ANY

- APPLICATION

- COMPACTIONID

- CONNECTORa

- CONNECTORS

- CONVERT

- DDL

- DEC

- FORCE

- LEADING

- NUMERIC

- OLDER

- PKFK_JOIN

- PREPARE

- QUALIFY

- REAL

- SOME

- SYNC

- TIMESTAMPLOCALTZ

- THAN

- TRAILING

- UNIQUE

The keywords for Hive 2023.1 are almost identical to the Keywords for Hive 4.0.0 except for TIME which is non-reserved in the Treasure Data environment.

| Version | Non-reserved Keywords | Reserved Keywords |

|---|---|---|

| Hive 1.2.0 | ADD, ADMIN, AFTER, ANALYZE, ARCHIVE, ASC, BEFORE, BUCKET, BUCKETS, CASCADE, CHANGE, CLUSTER, CLUSTERED, CLUSTERSTATUS, COLLECTION, COLUMNS, COMMENT, COMPACT, COMPACTIONS, COMPUTE, CONCATENATE, CONTINUE, DATA, DATABASES, DATETIME, DAY, DBPROPERTIES, DEFERRED, DEFINED, DELIMITED, DEPENDENCY, DESC, DIRECTORIES, DIRECTORY, DISABLE, DISTRIBUTE, ENABLE, ESCAPED, EXCLUSIVE, EXPLAIN, EXPORT, FIELDS, FILE, FILEFORMAT, FIRST, FORMAT, FORMATTED, FUNCTIONS, HOLD_DDLTIME, HOUR, IDXPROPERTIES, IGNORE, INDEX, INDEXES, INPATH, INPUTDRIVER, INPUTFORMAT, ITEMS, JAR, KEYS, LIMIT, LINES, LOAD, LOCATION, LOCK, LOCKS, LOGICAL, LONG, MAPJOIN, MATERIALIZED, METADATA, MINUS, MINUTE, MONTH, MSCK, NOSCAN, NO_DROP, OFFLINE, OPTION, OUTPUTDRIVER, OUTPUTFORMAT, OVERWRITE, OWNER, PARTITIONED, PARTITIONS, PLUS, PRETTY, PRINCIPALS, PROTECTION, PURGE, READ, READONLY, REBUILD, RECORDREADER, RECORDWRITER, REGEXP, RELOAD, RENAME, REPAIR, REPLACE, REPLICATION, RESTRICT, REWRITE, RLIKE, ROLE, ROLES, SCHEMA, SCHEMAS, SECOND, SEMI, SERDE, SERDEPROPERTIES, SERVER, SETS, SHARED, SHOW, SHOW_DATABASE, SKEWED, SORT, SORTED, SSL, STATISTICS, STORED, STREAMTABLE, STRING, STRUCT, TABLES, TBLPROPERTIES, TEMPORARY, TERMINATED, TINYINT, TOUCH, TRANSACTIONS, UNARCHIVE, UNDO, UNIONTYPE, UNLOCK, UNSET, UNSIGNED, URI, USE, UTC, VIEW, WHILE, YEAR | ALL, ALTER, AND, ARRAY, AS, AUTHORIZATION, BETWEEN, BIGINT, BINARY, BOOLEAN, BOTH, BY, CASE, CAST, CHAR, COLUMN, CONF, CREATE, CROSS, CUBE, CURRENT, CURRENT_DATE, CURRENT_TIMESTAMP, CURSOR, DATABASE, DATE, DECIMAL, DELETE, DESCRIBE, DISTINCT, DOUBLE, DROP, ELSE, END, EXCHANGE, EXISTS, EXTENDED, EXTERNAL, FALSE, FETCH, FLOAT, FOLLOWING, FOR, FROM, FULL, FUNCTION, GRANT, GROUP, GROUPING, HAVING, IF, IMPORT, IN, INNER, INSERT, INT, INTERSECT, INTERVAL, INTO, IS, JOIN, LATERAL, LEFT, LESS, LIKE, LOCAL, MACRO, MAP, MORE, NONE, NOT, NULL, OF, ON, OR, ORDER, OUT, OUTER, OVER, PARTIALSCAN, PARTITION, PERCENT, PRECEDING, PRESERVE, PROCEDURE, RANGE, READS, REDUCE, REVOKE, RIGHT, ROLLUP, ROW, ROWS, SELECT, SET, SMALLINT, TABLE, TABLESAMPLE, THEN, TIMESTAMP, TO, TRANSFORM, TRIGGER, TRUE, TRUNCATE, UNBOUNDED, UNION, UNIQUEJOIN, UPDATE, USER, USING, UTC_TMESTAMP, VALUES, VARCHAR, WHEN, WHERE, WINDOW, WITH |

| Hive 2.0.0 | removed: HOLD_DDLTIME, IGNORE, NO_DROP, OFFLINE, PROTECTION, READONLY, REGEXP, RLIKE added: AUTOCOMMIT, ISOLATION, LEVEL, OFFSET, SNAPSHOT, TRANSACTION, WORK, WRITE | added: COMMIT, ONLY, REGEXP, RLIKE, ROLLBACK, START |

| Hive 2.1.0 | added: ABORT, KEY, LAST, NORELY, NOVALIDATE, NULLS, RELY, VALIDATE | added: CACHE, CONSTRAINT, FOREIGN, PRIMARY, REFERENCES |

| Hive 2.2.0 | removed: MINUS added: CACHE, DAYS, DAYOFWEEK, DUMP, HOURS, MATCHED, MERGE, MINUTES, MONTHS, QUARTER, REPL, SECONDS, STATUS, VIEWS, WEEK, WEEKS, YEARS | removed: CACHE added: EXCEPT, EXTRACT, FLOOR, GROUPING, INTEGER, MINUS, PRECISION |

| Hive 2.3.0 | removed: MERGE added: DETAIL, EXPRESSION, OPERATOR, SUMMARY, VECTORIZATION, WAIT | added: MERGE |

| Hive 3.0.0 | removed: PRETTY added: ACTIVATE, ACTIVE, ALLOC_FRACTION, CHECK, DEFAULT, DO, ENFORCED, KILL, MANAGEMENT, MAPPING, MOVE, PATH, PLAN, PLANS, POOL, QUERY, QUERY_PARALLELISM, REOPTIMIZATION, RESOURCE, SCHEDULING_POLICY, UNMANAGED, WORKLOAD, ZONE | removed: PARTIALSCAN added: ANY, APPLICATION, DEC, NUMERIC, SYNC, TIME, TIMESTAMPLOCALTZ, UNIQUE |

| Hive 3.1.0 | N/A | N/A |

| Hive 4.0.0 | added: AST, AT, BRANCH, CBO, COST, CRON, DCPROPERTIES, DEBUG, DISABLED, DISTRIBUTED, ENABLED, EVERY, EXECUTE, EXECUTED, EXPIRE_SNAPSHOTS, IGNORE, JOINCOST, MANAGED, MANAGEDLOCATION, OPTIMIZE, REMOTE, RESPECT, RETAIN, RETENTION, SCHEDULED, SET_CURRENT_SNAPSHOT, SNAPSHOTS, SPEC, SYSTEM_TIME, SYSTEM_VERSION, TAG, TRANSACTIONAL, TRIM, TYPE, UNKNOWN, URL, WITHIN | added: COMPACTIONID, CONNECTOR, CONNECTORS, CONVERT, DDL, FORCE, LEADING, OLDER, PKFK_JOIN, PREPARE, QUALIFY, REAL, SOME, THAN, TRAILING |

| 2023.1 | added: TIME | removed: TIME |