Performance tuning your environment is recommended as a regular part of maintaining your system. Here are some performance tuning tips:

- Leveraging Time-based Partitioning

- Set Custom Schema

DISTRIBUTE BY ... SORT BYversusORDER BY- Avoid

SELECT count(DISTINCT field) FROM tbl - Considering the Cardinality within

GROUP BY - Efficient Top-k Query Processing using

each_top_k - Exploding Multiple Arrays at the Same Time with

TD_NUMERIC_RANGEandTD_ARRAY_INDEX

- Basic knowledge of Treasure Data.

- Basic knowledge of the Hive Query Lanaguage

All imported data is automatically partitioned into hourly buckets, based on the time field within each data record. By specifying the time range to query, you avoid reading unnecessary data and can thus speed up your query significantly.

When the time field within the WHERE clause is specified, the query parser will automatically detect the partitions to process. This auto detection will not work if you specify the time with float instead of int.

[GOOD]: SELECT field1, field2, field3 FROM tbl WHERE time > 1349393020

[GOOD]: SELECT field1, field2, field3 FROM tbl WHERE time > 1349393020 + 3600

[GOOD]: SELECT field1, field2, field3 FROM tbl WHERE time > 1349393020 - 3600

[BAD]: SELECT field1, field2, field3 FROM tbl WHERE time > 13493930200 / 10

[BAD]: SELECT field1, field2, field3 FROM tbl WHERE time > 1349393020.00

[BAD]: SELECT field1, field2, field3 FROM tbl WHERE time BETWEEN 1349392000 AND 1349394000An easier way to slice the data is to use TD_TIME_RANGE UDF.

[GOOD]: SELECT ... WHERE TD_TIME_RANGE(time, "2013-01-01 PDT")

[GOOD]: SELECT ... WHERE TD_TIME_RANGE(time, "2013-01-01", "PDT", NULL)

[GOOD]: SELECT ... WHERE TD_TIME_RANGE(time, "2013-01-01",

TD_TIME_ADD("2013-01-01", "1day", "PDT"))However, if you use division in TD_TIME_RANGE, time partition optimization doesn’t work. For instance, the following conditions disable optimization.

[BAD]: SELECT ... WHERE TD_TIME_RANGE(time, TD_SCHEDULED_TIME() / 86400 * 86400))

[BAD]: SELECT ... WHERE TD_TIME_RANGE(time, 1356998401 / 86400 * 86400))

All tables have two fields:

vtime

You can also set custom schema on the tables.

td schema:set testdb www_access action:string user:int

td query -w -d testdb "SELECT user, COUNT(1) AS cnt

FROM www_access

WHERE action='login'

GROUP BY user ORDER BY cnt DESC"After setting the schema, queries issued with named columns instead of v will use the schema information to achieve a more optimized execution path. In particular, GROUP BY performance will improve significantly.

In Hive, ORDER BY slows because it forces all the data to go into the same reducer node. By doing this, Hive ensures that the entire dataset is totally ordered.

Sometimes we do not require total ordering. For example, suppose you have a table called user_action_tablewhere each row has user_id, action, and time. Your goal is to order them by time per user_id.

If you are doing this with ORDER BY, you would run

SELECT time, user_id, action FROM user_action_table

ORDER BY user_id, timeHowever, you can achieve the same result with

SELECT time, user_id, action FROM user_action_table

DISTRIBUTE BY user_id SORT BY user_id, timeThe reason is because all the rows belonging to the same user_id go to the same reducer ("DISTRIBUTE BY user_id") and in each reducer, rows are sorted by time ("SORT BY time"). This is faster than the other query because it uses multiple reducers as opposed to a single reducer.

You can learn more about the differences between SORT BY and ORDER BY.

This query looks familiar to SQL users, but this query is very slow because only one reducer is used to process the request.

SELECT count(DISTINCT field) FROM tblRewrite the query to leverage multiple reducers:

SELECT

count(1)

FROM (SELECT DISTINCT field FROM tbl) tOften, GROUP BY can be faster if you carefully order a list of fields within the GROUP BY clause in order of high cardinality.

| SQL Syntax | |

|---|---|

| bad | SELECT GROUP BY gender, uid |

| good | SELECT GROUP BY uid, gender |

Efficient processing of Top-k queries is a crucial requirement in many interactive environments that involve massive amounts of data. The TD Hive extension each_top_k helps run Top-k processing efficiently.

- Suppose the following table as the input

| student | class | score |

|---|---|---|

| 1 | b | 70 |

| 2 | a | 80 |

| 3 | a | 90 |

| 4 | b | 50 |

| 5 | a | 70 |

| 6 | b | 60 |

- Then, list top-2 students for each class

| student | class | score | rank |

|---|---|---|---|

| 3 | a | 90 | 1 |

| 2 | a | 80 | 2 |

| 1 | b | 70 | 1 |

| 6 | b | 60 | 2 |

The standard way using SQL window function would be as follows:

SELECT

student, class, score, rank

FROM (

SELECT

student, class, score,

rank() over (PARTITION BY class ORDER BY score DESC) as rank

FROM

table

) t

WHERE rank <= 2An alternative and efficient way to compute top-k items using each_top_k is as follows:

SELECT

each_top_k(

2, class, score,

class, student -- output other columns in addition to rank and score

) as (rank, score, class, student)

FROM (

SELECT * FROM table

CLUSTER BY class -- Mandatory for `each_top_k`

) tCLUSTER BY x is a synonym of DISTRIBUTE BY x CLASS SORT BY x and required when using each_top_k.

each_top_k is beneficial where the number of grouping keys are large. If the number of grouping keys are not so large (e.g., less than 100), consider using rank() over instead.

The function signature of each_top_k is follows:

each_top_k(int k, ANY group, double value, arg1, arg2, ..., argN)

returns a relation (int rank, double value, arg1, arg2, .., argN).Any number types or timestamp are accepted for the type of value but it MUST be not NULL. Do null handling like if(value is null, -1, value) to avoid null.

If k is less than 0, reverse order is used and tail-K records are returned for each group.

The ranking semantics of each_top_k follows SQL’s dense_rank and then limits results by k. See Hivemall User Guide for further information.

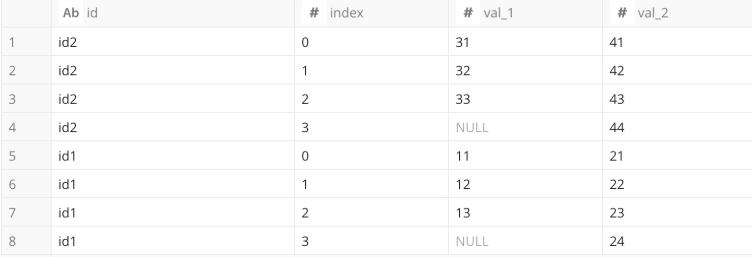

The combination of TD_NUMERIC_RANGE and TD_ARRAY_INDEX allows you to emit all the elements of an array into multiple rows using the LATERAL VIEW.

WITH t1 as (

-- Generate sample data

select 'id1' as id, ARRAY(11,12,13) as a, ARRAY(21,22,23,24) as b

union all

select 'id2' as id, ARRAY(31,32,33) as a, ARRAY(41,42,43,44) as b

)

select

id,

n as index,

TD_ARRAY_INDEX( a, n ) as val_1,

TD_ARRAY_INDEX( b, n ) as val_2

from

( select id, a, b from t1 ) t2

LATERAL VIEW

TD_NUMERIC_RANGE( size( b ) ) n1 as n