Prior to it's rebranding in 2021, the Trino query engine was known as PrestoSQL or more simply Presto. Treasure Data supported several earlier versions of Presto, and the current supported version is Presto 350. Newer versions of Presto are now supported under the Trino branding, and support for Trino 423 has now been introduced. For the immediate future, you will see both Presto and Trino used in Treasure Data documentation. New functionality or new features will be identified as being Trino; however, documentation for existing functions and features that are common to both Presto and Trino will continue to be identified as Presto.

Trino is an open-source, distributed SQL query engine. Trino can query data where it is stored, without needing to move data into a separate analytics system. Treasure Data has customized Trino to talk directly with our distributed columnar storage layer.

The two most popular engines are Hive and Trino (Presto):

Hive — Designed for querying of large data systems in the open-source Hadoop platform. Hive 2023.1 converts SQL-like queries into Tez jobs for easy execution and processing of extremely large volumes of data. Hive is optimized for query throughput and is described as a pull-model.

Trino (Presto) — Both Trino 423 and Presto 350 are designed for fast, interactive queries on data in HDFS, and others. Trino (Presto) is optimized for latency and is often described as a push model.

| Hive | Trino (Presto) | |

|---|---|---|

| Optimized for | Throughput | Interactivity |

| SQL Standardized fidelity | HiveQL (a subset of common data warehousing SQL) | Designed to comply with ANSI SQL |

| Window functions | Yes | Yes |

| Large JOINs | Very good for large fact-to-fact joins | Optimized for star scheme joins (1 large fact table and many smaller dimension tables) |

| Maximum Query Length | No Hard Limit | 1MB |

About Hive Query Engine

Apache Hive is a data warehouse system built on top of Apache Hadoop that facilitates easy data summarization, ad-hoc queries, and the analysis of large datasets stored in various databases and file systems that integrate with Hadoop. Hive offers a simple way to apply structure to large amounts of unstructured data and then perform batch SQL-like queries on that data.

The Treasure Data Hive service (TD Hive) provides batch data processing on data stored in Treasure Data’s data lake, based on Apache Hive. TD eliminates the need to run your own Hadoop clusters to handle Hive processing. Instead, Treasure Data operates compute clusters for running Hive jobs.

The Customer Data Platform (CDP) application uses Hive jobs for some of its internal operations, and you can also run your own Hive jobs. You can submit SELECT or DML queries using Hive’s query language, using TD Console, API calls, the TD Toolbelt, or from TD workflows. The service queues, executes the queries, and returns the results. You can also design your system so that results are delivered to destinations specified in your Result Output.

The Hive query language (HiveQL) is the primary data processing method for Treasure Data. HiveQL is powered by Apache Hive. Treasure Data is a CDP that allows users to collect, store, and analyze their data on the cloud. Treasure Data manages its own Hadoop cluster, which accepts queries from users and executes them using Apache Tez. HiveQL is one of the languages it supports.

About Trino Distributed Query Engine

Trino is an open-source, distributed SQL query engine. Trino can query data where it is stored, without needing to move data into a separate analytics system. Treasure Data has customized Trino to talk directly with our distributed columnar storage layer.

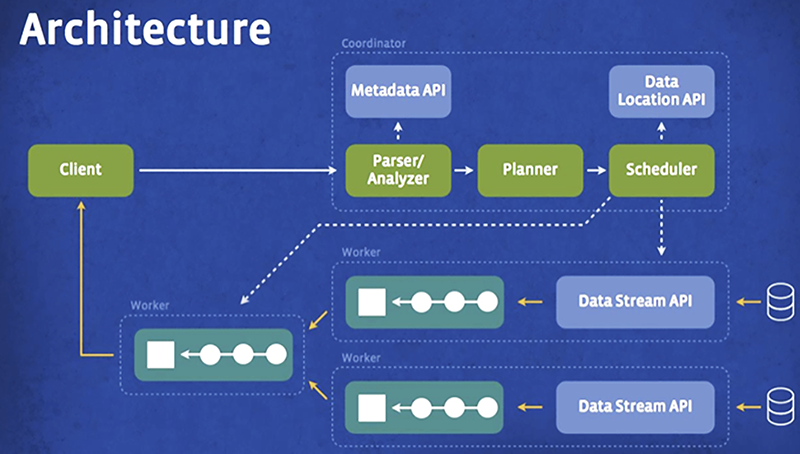

Trino(Presto) uses an architecture similar to a classic massively parallel processing (MPP) database management system.

It has one coordinator node working in sync with multiple worker nodes. A user can submit their SQL query to the coordinator which uses a custom query and execution engine to parse, plan, and schedule a distributed query plan across the worker nodes. Trino(Presto) is designed to support standard ANSI SQL semantics, including complex queries, aggregations, joins, left/right outer joins, sub-queries, window functions, distinct counts, and approximate percentiles.

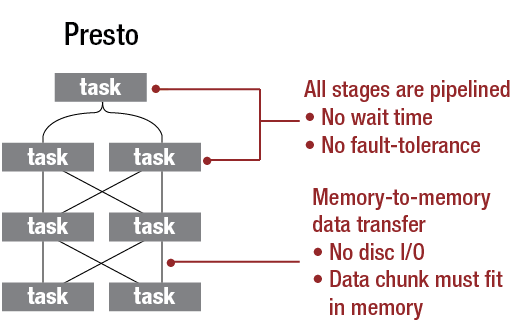

After a query is compiled, Trino(Presto) processes the request into multiple stages across the worker nodes. All processing is in-memory, and pipelined across the network between stages, to avoid any unnecessary I/O overhead.

Use Trino(Presto) to run fast analytic queries against data of any size. This is possible because Trino(Presto) is optimized for latency.

Trino(Presto) has a limitation on the maximum amount of memory that each task in a query can store, so if a query requires a large amount of memory, the query simply fails. Such error handling logic (or a lack thereof) is acceptable for interactive queries.

You can access Trino(Presto) using the following:

TD Console

Trino(Presto) JDBC/ODBC Driver

TD Toolbelt

REST API

Data Tank with Trino(Presto)

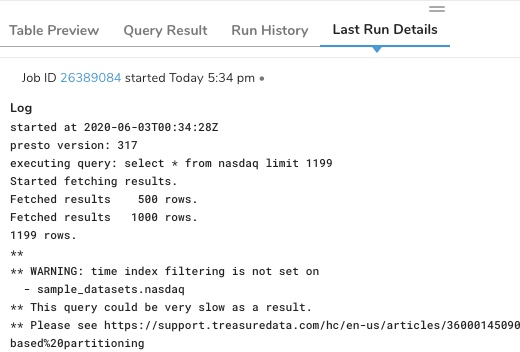

Open the TD Console.

Open the Data Workbench.

Select Queries.

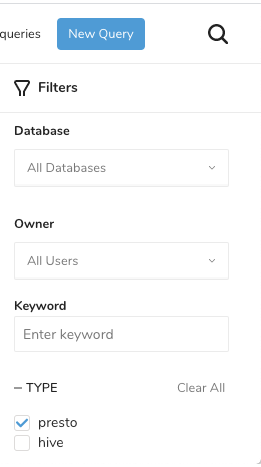

- In the Filters section, select the query type of presto.

- After you run your query, you can check its progress, peak memory usage, and so on.

The US Trino(Presto) gateway resides at api-presto.treasuredata.com/. Additional endpoints can be found here. You can use the TD toolbelt and drivers to connect to Treasure Data and issue queries.

JDBC Driver for Trino(Presto) - can be used by a Java application to connect to, and issue queries to, the Trino(Presto) query engine.

ODBC Driver for Trino(Presto) - can be used by Windows applications to connect to, and issue queries to, the Trino(Presto) query engine.

The Trino JDBC connection has several limitations:

- setAutoCommit(false) throws an exception because transactions are not supported.

- presto-jdbc queries share the same Trino(Presto) resources you have available for any other ad hoc or scheduled queries that you submit from the TD Console or through the REST API.

- presto-jdbc queries are shown in your account job log, with the exception of the following standard entries:

- Query result table

- Query plan

- Progress log

- The query result is converted into JSON before being submitted back to separate tools for display. As such, very large result tables may take some time before being displayed. If you have latency problems, contact Support.

- Certain BI tools issue “information_schema” requests that cause failed queries in our system. This may be an issue in your tool during first use.

If you see any unexpected errors/bugs/logs that occur during beta use, contact Support.

Our aim is to keep this API up as much as possible. Specifically, we expect to achieve a downtime of less than 1 hour total per month.

Setting up the JDBC Driver for Trino(Presto)

Download the Trino(Presto) jdbc driver.

- For using secure HTTPS (port 443) connection you need to use Presto 0.148 or later.

Use the following parameters to connect prestobase on development server:

- Driver name: io.trino.jdbc.TrinoDriver (if you use PrestoSQL Driver, you may use io.prestosql.jdbc.PrestoDriver)

- user: (Your TD API key)

- password: (dummy string. This value will be ignored)

- After Presto-jdbc 0.180, because of a bug, a dummy string needs to be set to the password.

Connection URL examples

The connection URL needs to have the following format:

jdbc:trino://api-presto.treasuredata.com:443/td/(database name)?SSL=true

or

jdbc:presto://api-presto.treasuredata.com:443/td/(database name)?SSL=true

jdbc:trino://api-presto.treasuredata.com:443/td?SSL=true

or

jdbc:trino://api-presto.treasuredata.com:443/td/sample_datasets?SSL=true

jdbc:presto://api-presto.treasuredata.com:443/td?SSL=true

or

jdbc:presto://api-presto.treasuredata.com:443/td/sample_datasets?SSL=trueExample Code

javac Sample.java

java -cp .:trino-jdbc-0.359.jar Sample (your TD API key)time=1412351990, method=GET, path=/category/office

time=1412351976, method=GET, path=/item/software/2265

time=1412351961, method=GET, path=/category/toys

time=1412351947, method=GET, path=/item/electronics/3305

time=1412351932, method=GET, path=/category/softwareSample.java File

import java.sql.*;

class Sample {

public static void main(String[] args) {

if(args.length != 1) {

System.err.println("Provide your TD API key as an argument");

return;

}

String apikey = args[0];

try {

Connection conn = DriverManager.getConnection("jdbc:trino://api-presto.treasuredata.com:443/td/sample_datasets?SSL=true", apikey, "dummy_password");

Statement stmt = conn.createStatement();

try {

ResultSet rs = stmt.executeQuery("SELECT time, method, path from www_access limit 5");

while(rs.next()) {

long time = rs.getLong(1);

String method = rs.getString(2);

String path = rs.getString(3);

System.out.println(String.format("time=%s, method=%s, path=%s", time, method, path));

}

}

finally {

stmt.close();

conn.close();

}

}

catch (Exception e) {

e.printStackTrace();

}

}

}Treasure Data provides a Trino(Presto) API that can integrate directly with our Trino(Presto)-specific ODBC driver. The installer for the driver is currently available for Windows 64-bit platform only. Downloads are available from your account or support representative.

Windows Treasure Data Trino(Presto) ODBC Driver installer TreasureDataPrestoODBC.msi

Tableau Datasource Customization File (.tdc) for Tableau users. Treasure Data Trino(Presto) ODBC Driver.tdc

- Copy this file to My Documents\My Tableau Repositories\Data Sources folder. (This file controls Tableau to use Trino(Presto) compatible SQL queries.)

Windows System Requirements

One of the following operating systems:

Windows Vista, 7, 8, or 10

Windows Server 2008 or later

Visual C++ Redistributable Packages for Visual Studio 2013 installed.

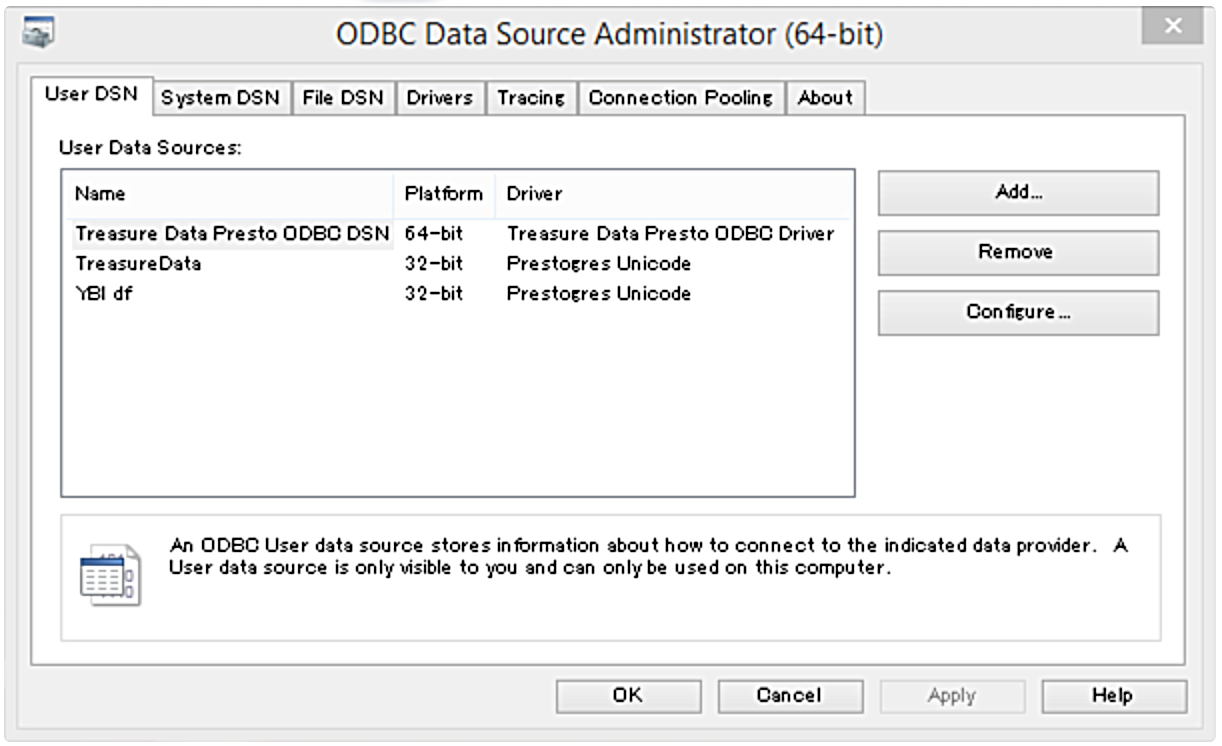

Migrate from Treasure Data ODBC Driver for 32bit

Treasure Data ODBC Driver for 32bit is no longer supported. Replace it with Presto ODBC Driver according to the following instructions. The old driver is no longer supported.

Install the Driver on Windows (64-bit)

- Download the ODBC driver installer TreasureDataPrestoODBC.msi.

- Double-click on the installer package and follow the instructions.

- Open the Start menu (or press the Windows key) and search/select ‘ODBC Data Source Administrator (64-bit)’.

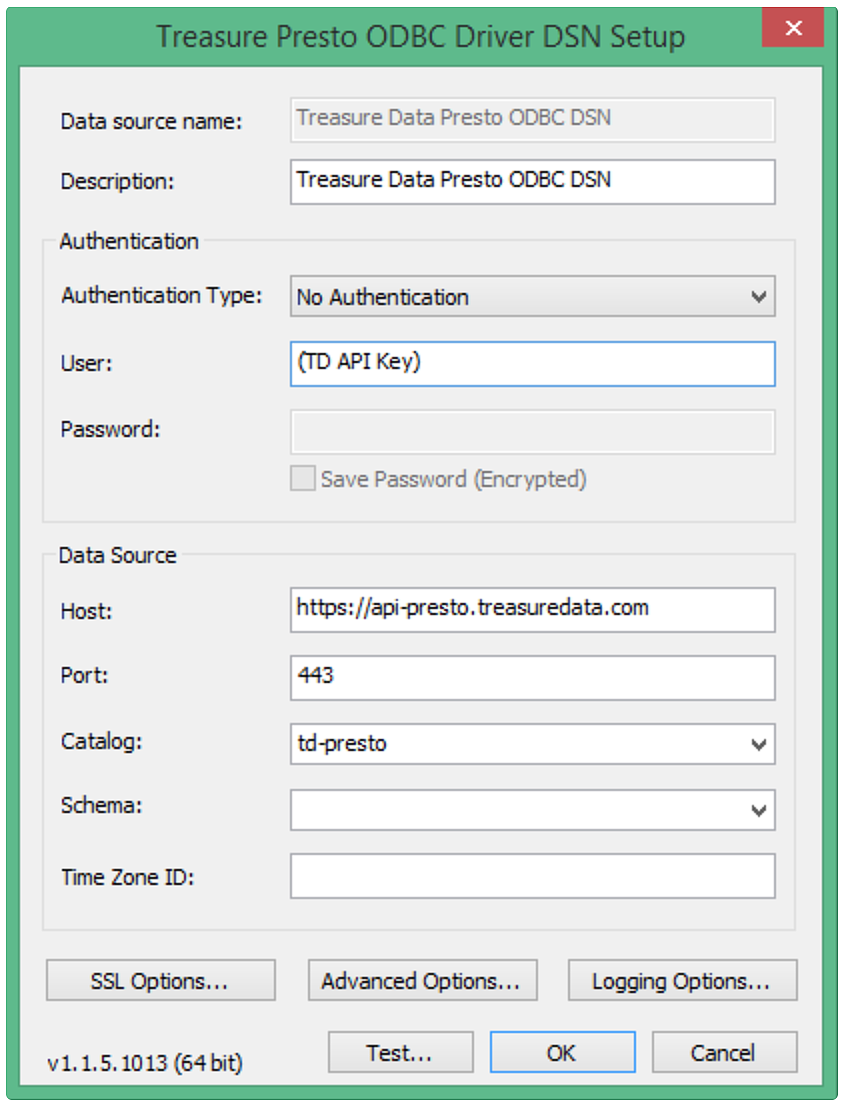

- Select Treasure Data Trino(Presto) ODBC DSN, and select Configure.

- Enter your Master TD API key into the User field.

- Type the following values:

- Authentication Type: No Authentication

- User: YOUR MASTER API KEY

- Host: https://api-presto.treasuredata.com

- Port: 443

- Catalog: td

- Select Test.

- If successful, press OK.

Configure the Proxy Server

If you are connecting to a data source through a proxy server, you must provide connection information for the proxy server.

- Open the ODBC Data Source Administrator where you created the DSN. Select the DSN, select Configure, and then select Proxy Options.

- Check the Use Proxy Server box.

- In the Proxy Host field, enter the hostname or IP address of the proxy server.

- In the Proxy Port field, enter the TCP port number that the proxy server uses to listen for client connections.

- In the Proxy Username field, enter your user name for accessing the proxy server.

- In the Proxy Password field, enter the password of the user name.

- (Optional) To save the proxy server in the Windows registry, select Save Password (Encrypted).

- Click OK.

You can use the Toolbelt command-line (CLI) client to issue queries. Treasure Data supports v0.10.99 and above.

Ensure that TD Toolbelt is installed.

Using the CLI, submit the following code.

td query -w -T presto -d testdb \

"SELECT code, COUNT(1) FROM www_access GROUP BY code"To access Trino(Presto) via REST API, you need an endpoint.

/v3/job/issue/presto/:databaseLearn about the Trino(Presto) query processing flow that joins data from a TD table with data from a Data Tank table.

By default, the Trino(Presto) service limits query execution time to 6 hours. You can manage the Trino(Presto) query execution time in several ways. You might want to limit some or all Trino(Presto) queries to run for a shorter period. For example:

You can limit execution time for ad hoc queries by default, to avoid unexpectedly high Trino(Presto) costs.

You can push users to choose Hive over Trino(Presto) for large and long-running data pipeline jobs.

Contact Support to request custom limits on the following types of queries:

Ad hoc queries from the Console, and queries submitted through ODBC and JDBC

Queries that run as scheduled queries or as steps in workflows

Queries explicitly assigned to any named resource pool

The limits apply to queries created by any user.

If you want to override any other limits for a single query, you can apply the following query hint at the beginning of the query:

-- SET SESSION query_max_execution_time = '6h'

SELECT

COUNT(*)

FROM

ALL_EVENTS

;If limits are set in your account, and you write a query with a hint, then the smallest limit between account, resource-group, query-hint, and global is used.

Supported time measures are

- h (hours)

- m (minutes)

- s (seconds)

All Trino(Presto) queries are limited to 6 hours runtime, regardless of any account-level policy or query hint.

If the limit specified in the query hint is longer than the configured limit, then the shorter configured limit still applies. For example, if a 1-hour limit is configured for ad hoc queries, then you can use the magic comment to limit a query to 5 minutes (5m) but not to increase the limit to 2 hours (2h).

For example, if there is a 30-minute limit configured on scheduled queries, you can run a longer query with the following query hints:

-- SET PROPERTY resource_group = 'overcommit'

-- SET SESSION query_max_execution_time = '3H'You might encounter queries that take a very long time to finish. For example, suppose you query the IP addresses of your website visitors which you want to convert into countries and then make a daily summary of all visitors by each country. The query examines the data of IP addresses from the first visitor to the most recent. This query is processed over and over again for each query, resulting in a query that takes over an hour to complete.

Stream data processing uses intermediate tables where only the new data (data that arrived since the last query) is processed and appended to the rest of the processed data, rather than processing the entire data every time.

For example, you would create an intermediate table by processing all the data you have up to a certain point in time. Then you would schedule a query that processes the new data that arrived since the last query and append the data to the intermediate table. And, finally, you would query the intermediate table for the aggregated data.

The following example uses these elements to create the table:

Sample database: sample_db

Intermediate table: visitor_country

Raw data table: visitor_raw

CREATE TABLE visitor_country AS SELECT

TD_DATE_TRUNK('day', time, 'PST') AS time,

userid,

TD_IP_TO_COUNTRY_CODE(ip) country

FROM visitor_raw

WHERE TD_TIME_RANGE(time, null, '2017-07-01', 'PST')INSERT INTO visitor_country

SELECT

TD_DATE_TRUNK('day', time, 'PST') AS time,

userid,

TD_IP_TO_COUNTRY_CODE(ip) country

FROM visitor_raw

WHERE TD_TIME_RANGE(time, TD_TIME_ADD(TD_SCHEDULED_TIME(), ‘-1d’), TD_SCHEDULED_TIME(), ‘PST’);SELECT country, approx_distinct(userid) num_users

FROM vistitor_country

GROUP BY 1Review one or more of the following topics to learn more about using the Trino(Presto) Query Engine:

- Writing Trino(Presto) Queries - provides examples of different types of Trino(Presto) queries.